It’s Ignite season, and Microsoft is bringing a new wave of features to help organizations embrace AI safely and confidently. As AI reshapes daily workflows, teams need fast, secure, and compliant access without slowing down innovation. The latest enhancements in Microsoft Entra Internet Access, now in Public Preview, focus on exactly that: preventing risky AI usage, strengthening data protection, and giving IT deeper visibility and control over secure AI access across the organization. Let’s take a closer look at these updates and the motivation behind them!

AI-centric Capabilities of Microsoft Entra Internet Access

In today’s AI-powered workplace, organizations are racing to harness the benefits of generative tools like Microsoft Copilot and other SaaS-based AI platforms. But with this acceleration comes a critical challenge: facilitating AI adoption while managing risks effectively.

Imagine an employee who, trying to speed up their work, uploads internal documents to an AI tool without realizing the platform may store prompts and responses in the cloud. At the same time, someone else in the company uses an unsanctioned AI chatbot to create reports, completely outside IT’s visibility and controls. Scenarios like these bring growing risks such as data leakage, shadow AI usage, and compliance gaps that organizations can easily overlook.

This is where Microsoft Entra Internet Access makes a difference. It goes beyond a traditional Secure Web Gateway, acting as a Secure Web and AI Gateway built specifically to secure AI access in today’s workplace. Here are four new AI-centric capabilities that help organizations embrace AI safely and smartly:

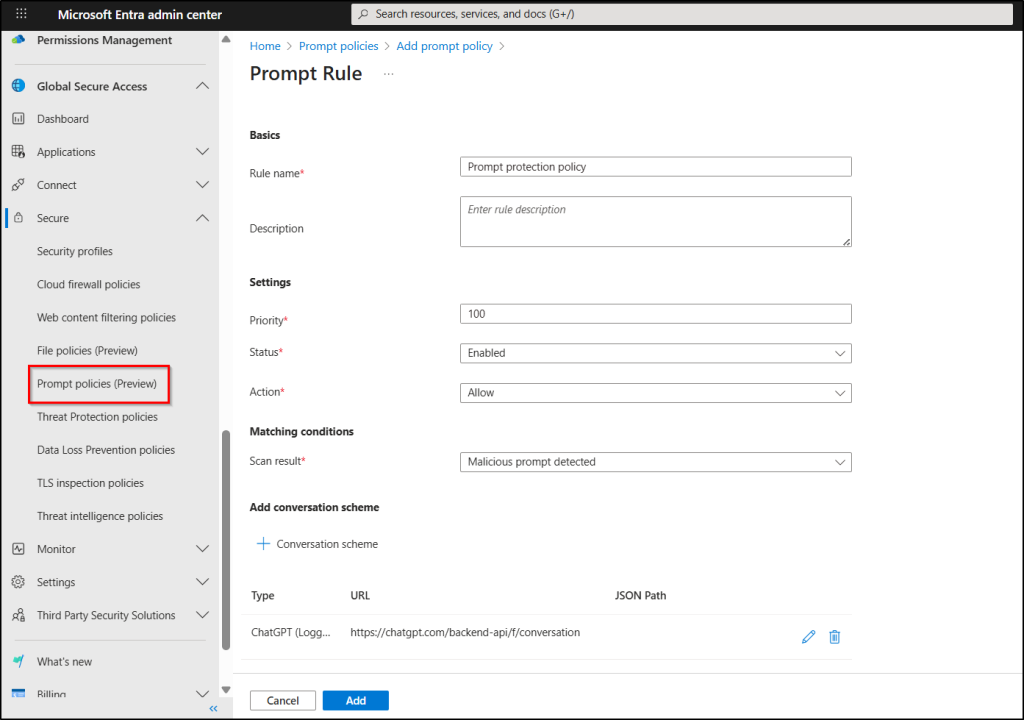

Prompt Injection Protection for Secure AI Prompts

Imagine an employee uses a public AI tool to generate documentation, unaware that a hidden prompt from a previous interaction is manipulating the AI’s response. Within seconds, the AI follows those buried commands instead of the user’s request. This is a classic prompt injection attack.

That’s where Prompt Shield comes in. This capability provides real-time protection against malicious prompt injections, one of the top risks for Large Language Models (LLMs). By extending Azure AI Prompt Shields to the network layer, Microsoft Entra Internet Access enforces guardrails consistently across all AI apps, agents, and LLMs without requiring code changes or per-app updates.

Here is what happens when an adversarial prompt is sent with Prompt Shield configured:

- The adversarial prompt is blocked instantly before it ever reaches the AI model, stopping the jailbreak attempt in real time.

- No sensitive customer data is exposed, and the AI assistant is prevented from performing any unauthorized action the attacker intended.

- The block happens consistently, whether the employee is using a laptop, mobile browser, or desktop app, because Prompt Shield enforces protection at the network level across all devices and applications.

With this in place, organizations can confidently embrace AI tools while keeping prompt-based threats at bay.
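To make the "blocked before it ever reaches the AI model" idea concrete, here is a minimal sketch of inline inspection at a gateway. It is not how Prompt Shield is implemented: `gateway_forward`, `toy_detector`, and the blocked-response text are hypothetical names invented for this illustration, and the substring check merely stands in for a real classifier.

```python
from typing import Callable

BLOCKED_RESPONSE = "Request blocked by policy"  # hypothetical message


def toy_detector(prompt: str) -> bool:
    """Stand-in for a real prompt-injection classifier (assumption)."""
    return "ignore previous instructions" in prompt.lower()


def gateway_forward(
    prompt: str,
    is_malicious: Callable[[str], bool],
    send_to_model: Callable[[str], str],
) -> str:
    """Inspect the prompt first; only forward it if it looks safe."""
    if is_malicious(prompt):
        # The model is never called, so the injected text never reaches it.
        return BLOCKED_RESPONSE
    return send_to_model(prompt)
```

Because the check sits in front of the model call rather than inside any one app, the same decision applies to every client that routes traffic through the gateway.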

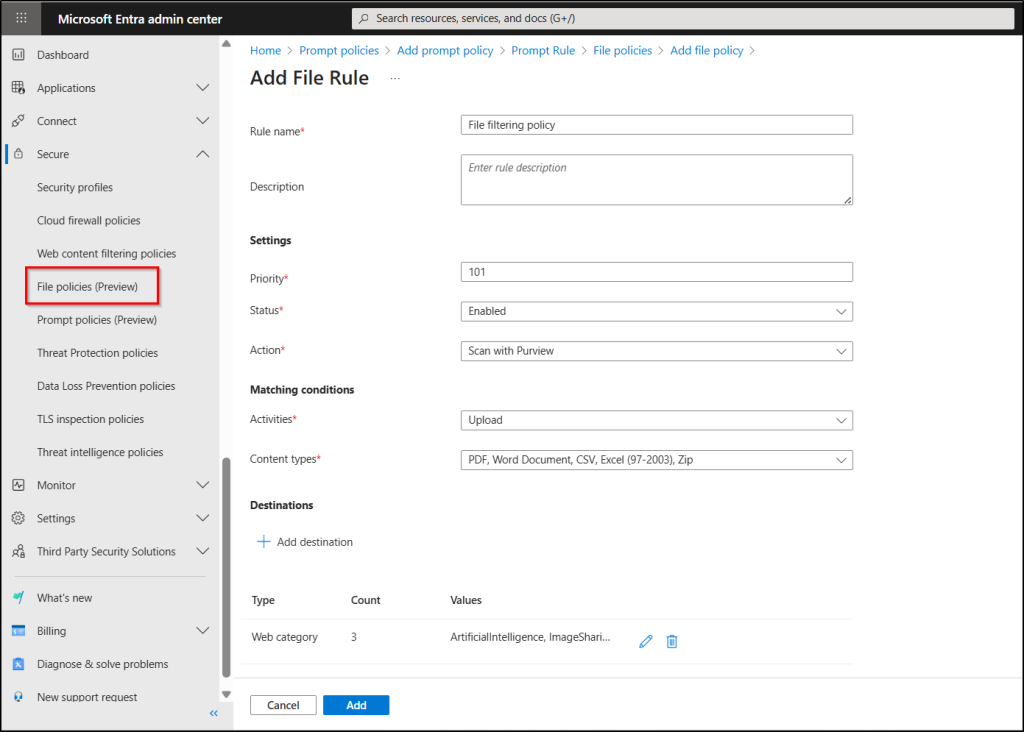

Network File Filtering to Prevent AI Data Leaks

Imagine an HR specialist uploads employee records into a generative AI tool to rewrite a summary. In seconds, sensitive data is outside the organization’s control: an accidental but serious data leak.

This is exactly why network file filtering matters. Microsoft’s solution combines Microsoft Purview’s data classification with identity-centric security policies in Global Secure Access, creating an advanced, network-layer DLP that works in real time. By inspecting file content and evaluating user risk instantly, organizations can block sensitive files from being uploaded to AI tools, without disrupting productivity. It helps prevent sensitive data uploads to unauthorized AI tools or SaaS platforms by enforcing file policies based on content, source, and destination.
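As a rough illustration of a file policy that weighs content, source, and destination, consider the sketch below. Everything in it is an assumption made for the example: the label names, the host name, the `Upload` fields, and the decision rules are hypothetical and do not represent the actual Global Secure Access or Microsoft Purview policy model.

```python
from dataclasses import dataclass

# Hypothetical values, for illustration only.
BLOCKED_LABELS = {"Confidential", "Highly Confidential"}
SANCTIONED_AI_HOSTS = {"approved-ai.example.com"}


@dataclass(frozen=True)
class Upload:
    user_risk: str          # source: "low", "medium", or "high"
    sensitivity_label: str  # content: label assigned by a classifier
    destination_host: str   # destination: where the file is being sent


def evaluate_upload(upload: Upload) -> str:
    """Return "allow" or "block" for a single file upload."""
    sanctioned = upload.destination_host.lower() in SANCTIONED_AI_HOSTS
    sensitive = upload.sensitivity_label in BLOCKED_LABELS
    if sensitive and not sanctioned:
        return "block"  # sensitive content headed to an unapproved tool
    if upload.user_risk == "high" and not sanctioned:
        return "block"  # risky user sending anything to an unapproved tool
    return "allow"
```

In this sketch, the HR upload from the scenario above would be blocked because the file carries a sensitive label and the destination is not on the sanctioned list, while ordinary files remain unaffected.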

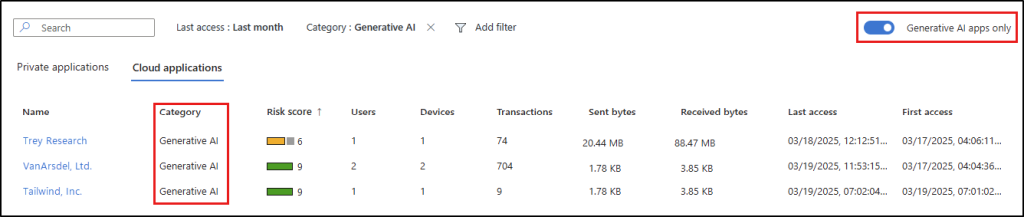

Shadow AI Detection for Unauthorized AI Usage

Now, imagine an IT team notices unusual outbound traffic hitting unknown AI-related domains. After digging deeper, they realize multiple employees have been using unapproved AI chatbots to draft emails, summarize documents, and analyze data.

The tools seem harmless, but without governance or monitoring, they introduce serious risks: no control over where data goes, no audit trails, and no assurance that sensitive information isn’t being stored or shared externally. This is the real-world threat of Shadow AI.

With Microsoft Entra Internet Access, security teams gain deep visibility into AI tool usage through Cloud Application Usage Analytics and Defender for Cloud Apps risk scoring. This empowers them to detect trends, flag high-risk apps, and act, whether that means applying Conditional Access or blocking access entirely.
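The kind of analysis described here can be pictured as a simple aggregation over traffic records. The sketch below is only an illustration under stated assumptions: the record shape, the function name `summarize_ai_usage`, and the 0 to 10 risk scale (higher meaning riskier) are invented for this example and are not the Defender for Cloud Apps scoring model or any Microsoft API.

```python
from collections import Counter
from typing import Dict, Iterable, List, Set, Tuple


def summarize_ai_usage(
    records: Iterable[Tuple[str, str]],  # (user, destination host) pairs
    sanctioned: Set[str],
    risk_scores: Dict[str, int],         # hypothetical scale: 0 (safe) to 10 (risky)
    risk_threshold: int = 7,
) -> List[dict]:
    """Summarize traffic to unsanctioned AI hosts, busiest first."""
    requests: Counter = Counter()
    users: Dict[str, Set[str]] = {}
    for user, host in records:
        if host in sanctioned:
            continue  # approved tools are not shadow AI
        requests[host] += 1
        users.setdefault(host, set()).add(user)

    report = []
    for host, count in requests.most_common():
        score = risk_scores.get(host)
        report.append({
            "host": host,
            "requests": count,
            "users": len(users[host]),
            # Treat hosts with no known score as high risk.
            "high_risk": score is None or score >= risk_threshold,
        })
    return report
```

A report like this gives a security team a starting point for deciding which apps to review, restrict, or block.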

Block Unsanctioned MCPs to Control AI Endpoints

Imagine a scenario where a developer integrates an internal tool with an external AI model to automate data enrichment. What they don’t realize is that the connection is made through an unverified Model Context Protocol (MCP) endpoint, one that IT has neither approved nor monitors.

Within minutes, internal metadata begins flowing to an untrusted service, creating a silent but serious compliance and security risk. This is exactly the kind of gap organizations face when MCP endpoints aren’t governed or restricted. Microsoft Entra Internet Access comes to the rescue and helps organizations control and block unsanctioned MCPs by filtering access to AI endpoints at the network level.
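Conceptually, blocking unsanctioned MCP endpoints comes down to an allowlist decision on the destination. The following is a minimal sketch of that idea, not the product's actual filtering logic; the host name and the function `is_mcp_endpoint_allowed` are hypothetical.

```python
from urllib.parse import urlsplit

# Hypothetical list of MCP servers approved by IT.
ALLOWED_MCP_HOSTS = frozenset({"mcp.internal.example.com"})


def is_mcp_endpoint_allowed(url: str, allowed_hosts=ALLOWED_MCP_HOSTS) -> bool:
    """Allow only HTTPS connections to explicitly approved MCP hosts."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False  # assumption for this sketch: plain HTTP is never allowed
    host = (parts.hostname or "").lower()
    return host in allowed_hosts
```

Exact host matching is used deliberately here: a suffix or substring match could let through look-alike domains such as `mcp.internal.example.com.attacker.example`.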

Closing Thoughts

As AI adoption accelerates across every industry, securing access at the network layer is no longer optional; it’s foundational. With Microsoft Entra Internet Access, organizations gain the visibility, control, and compliance needed to embrace AI with confidence rather than caution.

And this is just the beginning; we’ll be publishing deep-dive blogs on each capability soon to help you make the most of Microsoft Entra in the AI era. Stay tuned! 😊