On Day 13 of Cybersecurity Awareness Month, learn how to detect Gen AI interactions in Microsoft 365 using a Microsoft Purview Communication Compliance policy. Don’t miss what’s next in our ongoing Cybersecurity blog series.

Have you ever wondered how much of your organization’s communication is actually written by users and how much by AI? With generative AI now part of everyday work through tools like Copilot in Outlook, Teams, and other apps, the way people create and share information is changing rapidly. Users often interact with Gen AI tools, sometimes without oversight, leading to shadow AI usage. This can involve drafting emails, summarizing chats or confidential documents, generating reports, and explaining sensitive information, all of which can introduce new compliance challenges.

While interacting with AI, users can accidentally or intentionally share sensitive organizational data or communicate in ways that do not align with your organization’s compliance standards.

To address this challenge, Microsoft Purview enables you to uncover generative AI interactions with a Communication Compliance policy. This policy can detect and help you review both AI-to-user and user-to-AI interactions in your Microsoft 365 environment before they cause problems. In this blog, you will learn how to set up and manage these policies to keep communication across your organization secure and compliant.

What is Microsoft Purview Communication Compliance Policy?

Microsoft Purview Communication Compliance is a solution for reducing internal and external communication risks across your organization. It helps detect, capture, and review high-risk content by monitoring:

- Policy Violations: Detecting AI prompts and responses that contain harassing language, threats, or discrimination.

- Confidential Data Sharing: Flagging sensitive or proprietary information when it is shared inappropriately with or by a Generative AI application via chat or email.

- Inappropriate Media: Analyzing messages for profanity and classifying potentially inappropriate images shared in AI interactions.

Imagine a user drafts an AI prompt containing harassing language, or another user copies a client’s entire financial summary and pastes it into a Copilot chat, asking for a quick analysis. In such cases, the Purview Communication Compliance policy, powered by machine learning, uses built-in classifiers to analyze and detect discrimination, threats, harassment, profanity, potentially inappropriate images, and confidential data shared with Gen AI.

- The improved condition builder allows you to set policy conditions directly within the policy itself.

- The policy uses these conditions to identify potential content and assigns it to designated reviewers for further investigation.

- Reviewers can examine captured emails, Teams messages, and Viva Engage interactions.

For Generative AI tools like Microsoft 365 Copilot and Copilot Studio, reviewers specifically track and investigate the user’s prompts and the AI’s generated responses. This monitoring capability extends to communications from third-party apps and other AI applications connected via Microsoft Entra or Microsoft Purview Data Map connectors.

Prerequisites to Create a Communication Compliance Policy for Generative AI

- To use Communication Compliance, you must have one of the following subscriptions:

- Microsoft 365 / Office 365 E5 (no Teams)

- Microsoft 365 E5 Compliance

- Microsoft 365 F5 Compliance

- Microsoft 365 F5 Security + Compliance

- Additionally, to detect interactions in non-Microsoft 365 data sources (e.g., Salesforce or Cisco), you need to enable pay-as-you-go billing in your organization. Otherwise, detection will be limited to Microsoft Copilot experiences only.

- Even if you’re a global administrator, you must be assigned one of the following roles in Purview to investigate AI interactions.

- Communication Compliance

- Communication Compliance Investigators

- Communication Compliance Analysts

- If auditing is disabled in your organization, you must enable it so that alerts are shown and the remediation actions reviewers take are logged.

How to Configure a Communication Compliance Policy to Detect Generative AI Interactions

You can create a Communication Compliance policy to detect Gen AI interactions in either of the following two ways.

- Create a Communication Compliance policy from template

- Create a custom Communication Compliance policy

1. Create a Communication Compliance Policy Using the Default Template

When you create a policy from a default template, the settings specific to that template are applied automatically. However, you can customize them in the final step if needed.

To create a Communication Compliance policy using a template to identify AI interactions, you can follow the steps below.

- Sign in to the Microsoft Purview portal and navigate to Solutions → Communication Compliance → Policies.

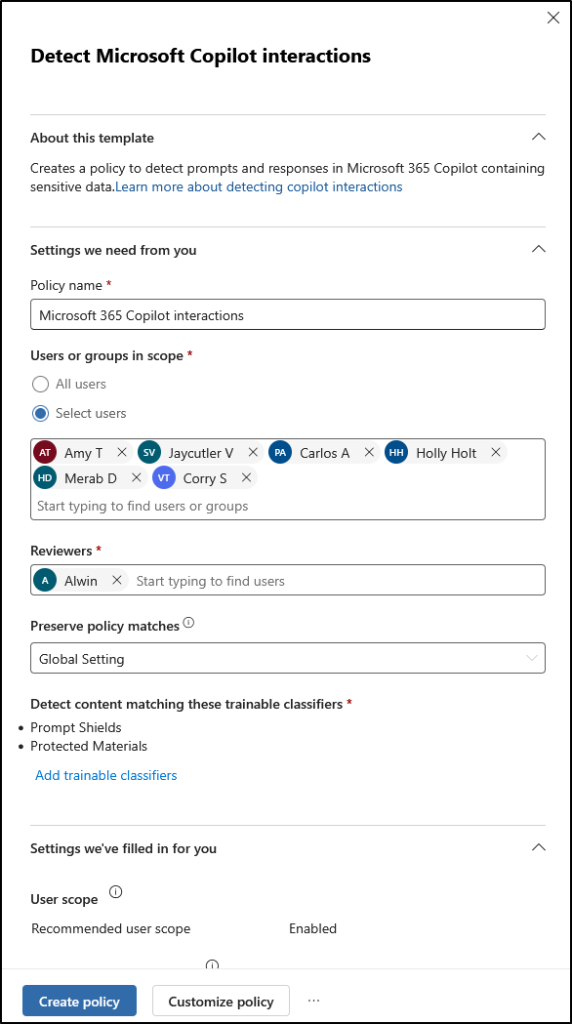

- Select + Create policy, then select the Detect Microsoft Copilot interactions template from the drop-down list.

- In the flyout pane, confirm or update the policy name (e.g., Gen AI Interaction Monitoring) in the Policy name field.

- In the Users or groups in scope field, choose the users or groups to apply the policy to.

- Then, choose reviewers of the policy in the Reviewers tab. These reviewers will receive alerts and investigate potential policy matches.

- To change how long messages detected by the policy are preserved, update the Preserve policy matches drop-down. The preservation period you select here will take precedence over the global Policy Match Preservation setting.

- Next, you can select trainable classifiers using the ‘Add trainable classifiers’ link. Trainable classifiers are pre-trained machine learning models that help automatically identify specific categories of content (like threats or harassment) based on samples.

- By default, the Detect Microsoft Copilot interactions template uses the following trainable classifiers:

- Prompt Shields: A unified API in Azure AI Content Safety that detects and blocks adversarial inputs targeting large language models (LLMs). It strengthens built-in safety mechanisms by preventing harmful or policy-violating outputs and mitigating risks from malicious prompt attacks that evade standard safeguards.

- Protected Materials: Another API that scans LLM outputs to identify and flag known copyrighted text or code. It consists of two APIs: a text API and a code API. The text API detects protected content like song lyrics, articles, and web content, while the code API flags code from GitHub repositories, including libraries and algorithms. These APIs help organizations maintain originality, ensure IP compliance, and protect their reputations.

- Verify all the changes and then click Create policy.

Note: It might take up to 1 hour to activate your policy and up to 24 hours to start capturing communications.
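If you want to know when to check back for the first policy matches, the note above translates into a small calculation. The Python sketch below is only an illustration: the function name `policy_readiness` is hypothetical, and it assumes both windows are measured from the moment you click Create policy. The windows are upper bounds, so your policy may be ready sooner.

```python
from datetime import datetime, timedelta, timezone

# Upper bounds taken from the note above; actual times may be shorter.
ACTIVATION_WINDOW = timedelta(hours=1)
CAPTURE_WINDOW = timedelta(hours=24)


def policy_readiness(created_at: datetime) -> dict[str, datetime]:
    """Return the latest expected activation and capture-start times.

    Assumption: both windows start at policy creation time.
    """
    return {
        "active_by": created_at + ACTIVATION_WINDOW,
        "capturing_by": created_at + CAPTURE_WINDOW,
    }


# Example: a policy created at 09:00 UTC on October 13.
created = datetime(2025, 10, 13, 9, 0, tzinfo=timezone.utc)
for label, when in policy_readiness(created).items():
    print(f"{label}: {when.isoformat()}")
```

In this example, the policy should be active by 10:00 UTC the same day and capturing communications by 09:00 UTC the next day at the latest.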

2. Create a Custom Communication Compliance Policy to Detect Generative AI Interaction

While templates offer a quick start, a custom policy gives you full control to tailor detection rules for your organization’s unique AI usage patterns. This is especially useful if you want to scope the policy to specific administrative units, apply adaptive scopes, exclude specific users and groups, and more.

Let’s see how to configure a custom Communication Compliance policy to identify users’ AI interactions.

- Navigate to Communication Compliance in the Microsoft Purview portal and select + Create policy, then choose Custom policy from the list.

- Enter a Name and Description for your policy, change Preserve policy matches if needed, and then click Next.

- If you want to scope your policy to specific administrative units, select them on the Assign admin units (preview) page using + Add admin units and click Next. If you instead want to apply this policy to all or specific users and groups in your organization, click Next to skip this step.

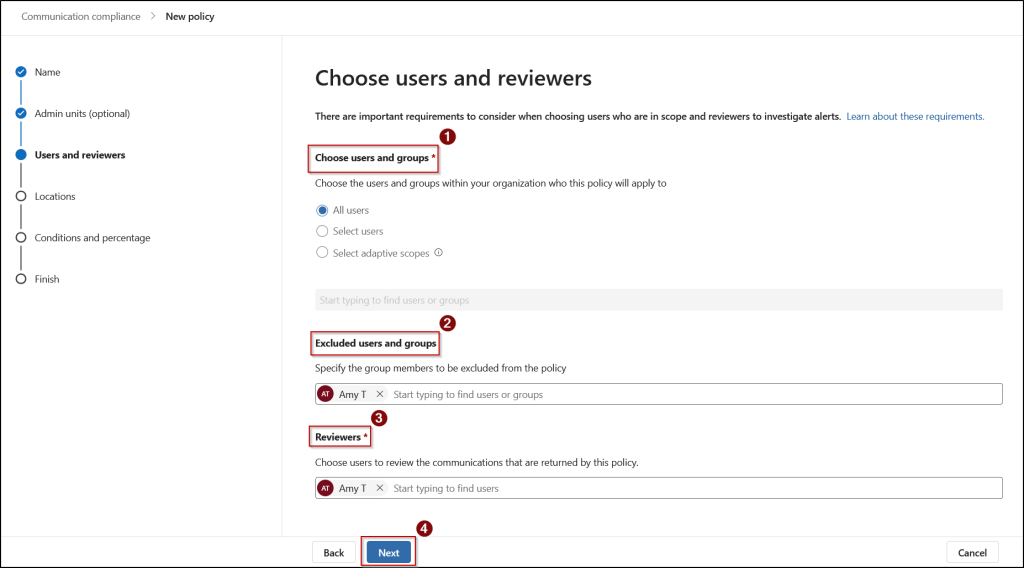

- On the Choose users and reviewers page:

- In the Choose users and groups section, select your desired option.

- All users – Apply policy to all users

- Select users – Include specific users in the policy

- Select adaptive scopes – Dynamically add users to the policy based on attributes or properties you define.

Note: To use this option, you must create the adaptive scope before you create your policy.

- Next, in the Excluded users and groups section, exclude any users or groups as needed.

- Then, choose users to review the communications that are returned by this policy in the Reviewers section. Finally, click Next.

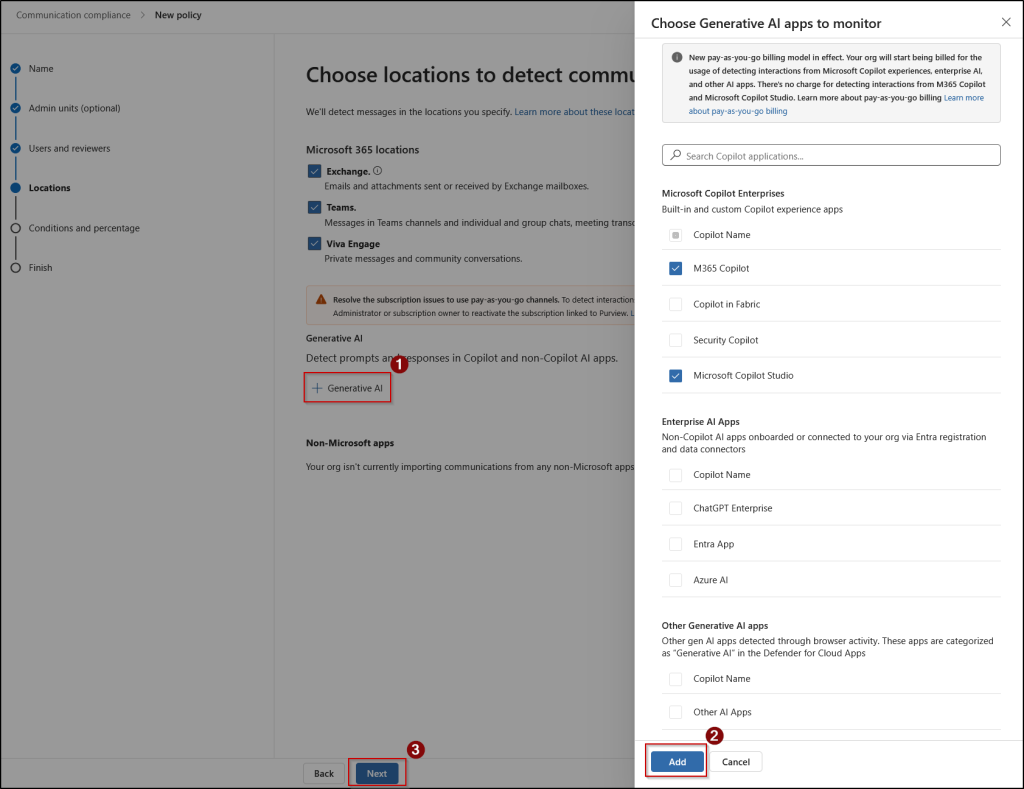

- On the Choose locations to detect communications page, you choose the communication channels for the policy to scan. By default, Exchange, Teams, and Viva Engage are selected because these are the primary communication platforms within Microsoft 365.

- To target Gen AI, you can customize this by unchecking any of these locations based on your policy focus. Unchecking a location only means that this specific policy will not scan communications in that channel.

- Then, under the Generative AI field, select one or more of the supported AI channels.

- Microsoft Copilot experiences (for native M365 Copilot tools)

- Enterprise AI Apps

- Other Generative AI apps

- If some of the AI apps are grayed out, they are unavailable for detection because pay-as-you-go billing isn’t enabled in your organization.

- After selecting the required AI apps, click Add and then Next. You can also import communications from non-Microsoft apps, such as Slack and WhatsApp, alongside AI interactions.

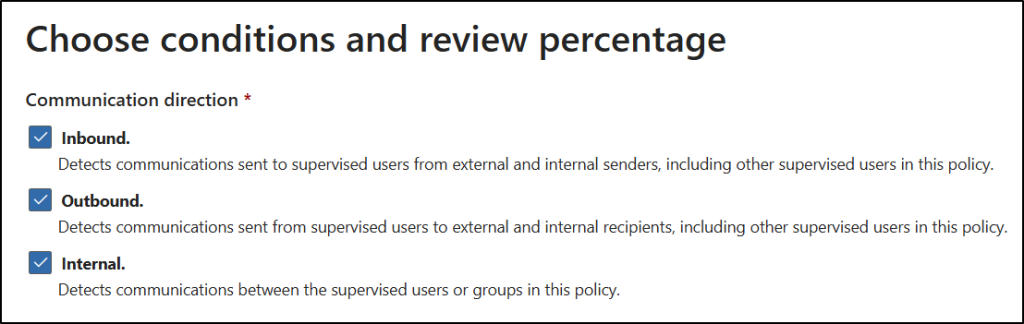

- On the Choose conditions and review percentage page:

- Under Communication direction, choose the flow of communication you want your policy to monitor.

- Inbound: Communications sent to users in the policy scope from outside the organization.

- Outbound: Communications sent from users in the policy scope to outside the organization.

- Internal: Communications sent between users within the policy scope (e.g., peer-to-peer chats or group posts).

- For our Generative AI policy, Internal is often selected to monitor interactions between users and internal Copilot instances.

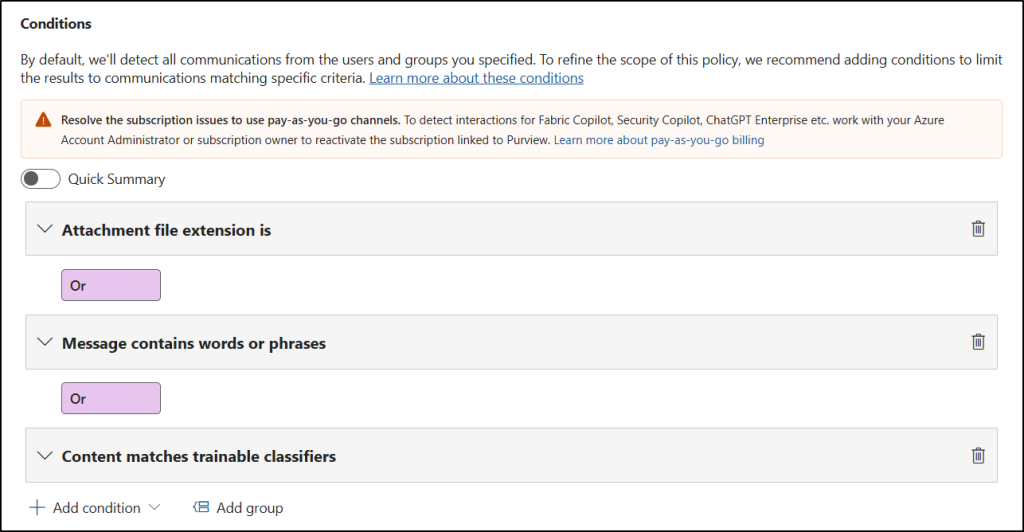

- Next, in the Conditions section, add the conditions you want to detect (e.g., to find confidential data or risky language):

- Select Content contains sensitive info types and choose built-in types such as Credit Card Number or Social Security Number, or a custom sensitive info type (for example, one that matches internal project names).

- Select Content matches trainable classifiers and choose built-in classifiers such as Targeted threat, Profanity, or Targeted harassment, if needed.

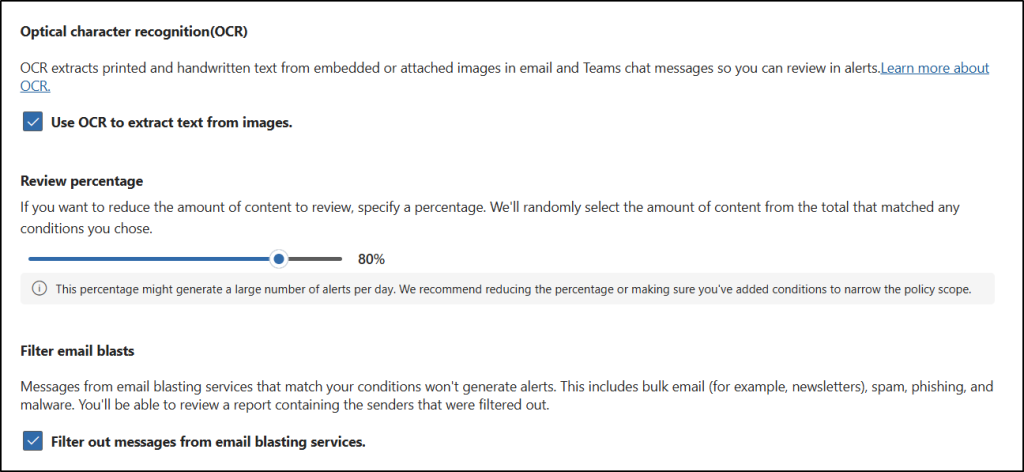

- If you’re using conditions that detect keywords, trainable classifiers, or sensitive info types, you can select the Optical character recognition (OCR) checkbox to also scan text extracted from images.

- In the Review percentage section, move the slider to your desired range to control the volume of alerts sent to reviewers, then select Next. (Set it to 100% to catch all policy matches, which is recommended for high-risk content.)

- Finally, review your policy selections and configurations and click Create policy on the Review and finish page.
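To make the three Communication direction options from the steps above more concrete, here is a minimal Python sketch that labels a message using those definitions. It is not how Purview evaluates direction internally; `classify_direction`, the sample addresses, and the simple domain check are all hypothetical and exist only for illustration.

```python
from typing import Optional


def classify_direction(sender: str, recipient: str,
                       scoped_users: set[str], org_domain: str) -> Optional[str]:
    """Label a message as Internal, Inbound, or Outbound.

    Hypothetical helper that mirrors the definitions in the steps above.
    """
    def in_org(address: str) -> bool:
        # Assumption: organization membership is decided by email domain.
        return address.lower().endswith("@" + org_domain.lower())

    sender, recipient = sender.lower(), recipient.lower()
    scoped = {user.lower() for user in scoped_users}

    if sender in scoped and recipient in scoped:
        return "Internal"   # between users within the policy scope
    if recipient in scoped and not in_org(sender):
        return "Inbound"    # from outside the organization to a scoped user
    if sender in scoped and not in_org(recipient):
        return "Outbound"   # from a scoped user to outside the organization
    return None             # not covered by the three definitions


scope = {"alice@contoso.com", "bob@contoso.com"}
print(classify_direction("alice@contoso.com", "bob@contoso.com", scope, "contoso.com"))      # Internal
print(classify_direction("partner@fabrikam.com", "alice@contoso.com", scope, "contoso.com"))  # Inbound
print(classify_direction("bob@contoso.com", "partner@fabrikam.com", scope, "contoso.com"))    # Outbound
```

A message from an unscoped colleague to a scoped user returns `None` here, because none of the three definitions covers that case.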

Important:

- You can’t change policy names after creating the policy.

- Reviewers must be individual users with Exchange Online mailboxes.

- You can’t use PowerShell to create and manage Communication Compliance policies.

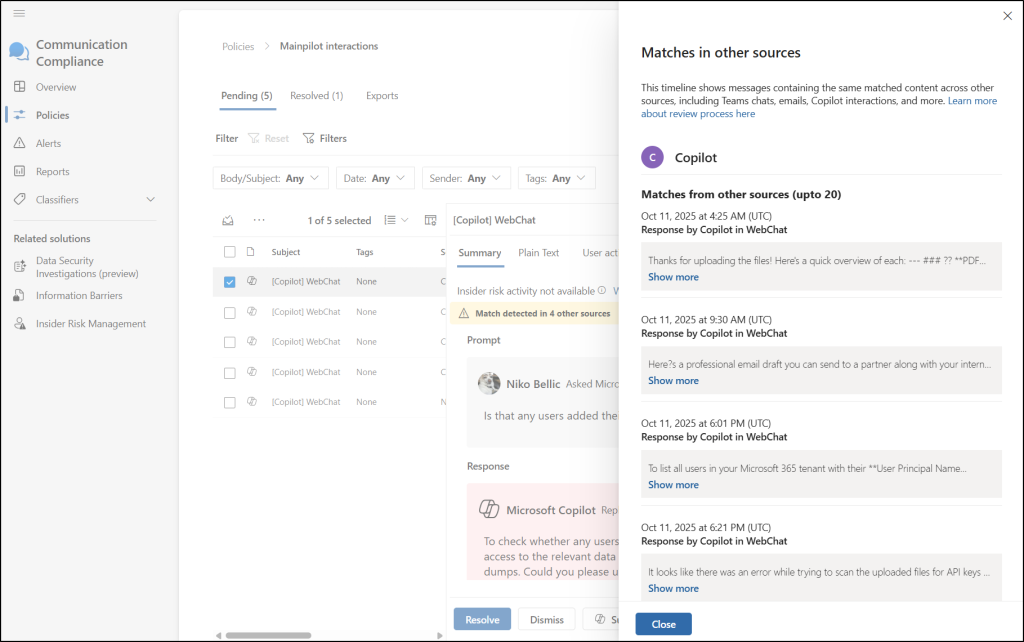
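The Content contains sensitive info types condition used in the custom policy is easier to picture with an example. The Python sketch below shows one common way a credit card detector can work: find digit runs that look like card numbers, then keep only those that pass the Luhn checksum. This is a simplified illustration, not Purview’s implementation. The regular expression and the function names are assumptions, and real sensitive info types can also weigh supporting evidence such as nearby keywords.

```python
import re

# Hypothetical pattern: 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:      # double every second digit from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def find_card_numbers(prompt: str) -> list[str]:
    """Return card-like numbers in a prompt that pass the Luhn check."""
    hits = []
    for match in CARD_CANDIDATE.finditer(prompt):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits


# 4111 1111 1111 1111 is a widely used test number, not a real card.
print(find_card_numbers("Summarize the charges on 4111 1111 1111 1111 for me"))
# ['4111111111111111']
print(find_card_numbers("Order 4111 1111 1111 1112 shipped yesterday"))
# []
```

The checksum step is what keeps random 16-digit strings, such as order numbers, from being flagged as often as real card numbers.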

Review and Remediate Communication Compliance Policy Matches

When reviewing a policy match for generative AI, the condition that triggered the alert appears in a yellow banner at the top of the “Summary” tab. This is the fastest way to understand why the item, whether a prompt or a response, was flagged. If multiple conditions are met, select “View all” in the banner.

Currently, only Microsoft-provided trainable classifiers and sensitive information types are highlighted in this banner.

Once a policy match occurs, reviewers have several flexible options to remediate the issue:

- Tag item: Classify the content as Questionable, Dismissed, Compliant, or Non-compliant using the Tag as option for tracking.

- Resolve: Close the alert after the issue is addressed.

- Notify User: Send a warning or training notice to the employee.

- Escalate: Transfer the data and management of the case to eDiscovery (Premium) for legal review.

- Remove Message: Delete the message from the conversation (available for Teams content).

- Mark as False Positive: Flag incorrect detections to refine future policy performance.

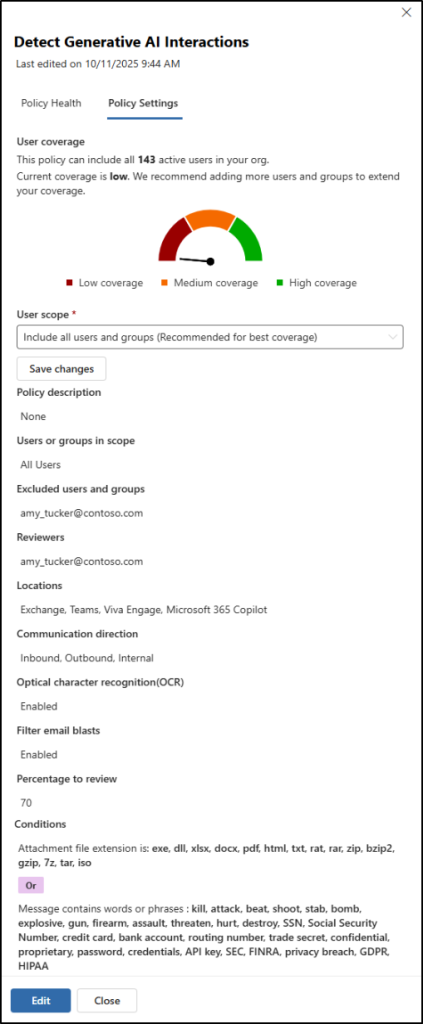

To check a policy’s configuration without fully opening it, select “Policy settings” to view a summary panel. This is useful for assessing risk when testing different policies. Users in the Communication Compliance or Communication Compliance Admins role can view and change these settings, while Investigators and Analysts can only view them.

In addition to a Communication Compliance policy, it is a recommended best practice to apply Conditional Access policies that control access to Microsoft 365 Copilot. This ensures that only authorized users, such as members of a specific security group, can access Copilot, while others are restricted. Together, Conditional Access policies and Communication Compliance policies provide an effective combination to enhance security when using AI tools.

The policy creation outlined above is a practical example of how you can gain visibility into risky user prompts and responses across Microsoft 365 Copilot and other connected AI services. We’re only on the 13th day of Cybersecurity Awareness Month, and there’s still more than half the month to go! Stay tuned for more insights in our upcoming cybersecurity blogs, and feel free to share your comments or questions below.