On Day 9 of Cybersecurity Awareness Month, learn how to configure Conditional Access policies to protect Generative AI apps such as Microsoft 365 Copilot and Security Copilot. Stay tuned for upcoming blogs in the Cybersecurity blog series.

Nowadays, AI is transforming the workplace. ✨ Imagine a scenario where Copilot is part of your Microsoft 365 organization’s everyday workflows. Employees use it to draft emails, analyse data, and generate insights, rapidly accelerating productivity.

But what if a threat actor gains access to a compromised user account? Without proper controls, Copilot could be used to exfiltrate business data and reach sensitive content directly through prompts, with no need to explore other Microsoft 365 services. That is why organizations need smarter controls, not just broad fences. ⚠️ Tools like DSPM for AI can help continuously monitor and protect sensitive data used within Copilot services.

“Security isn’t just about preventing breaches; it’s about enabling safe innovation.”

So how can you ensure that users use Microsoft 365 Copilot and Microsoft Security Copilot safely without risking data leaks or compliance violations? The answer lies in configuring Conditional Access policies to protect Generative AI apps.

In this blog, we will cover practical steps to protect your AI environment, enforce phishing-resistant MFA, restrict insider-risk users, and ensure employees acknowledge organizational AI usage policies before accessing Copilot services.

How to Set Up Conditional Access Policies for Specific Generative AI Services?

Let’s go through the steps to create Conditional Access policies that ensure only trusted users can access Microsoft 365 Copilot and Microsoft Security Copilot:

- Create service principals for Generative AI services in Microsoft 365

- Create Conditional Access policy for Microsoft 365 Copilot services

1. Create Service Principals for Generative AI Services in Microsoft 365

To apply Conditional Access policies that protect Generative AI apps such as Microsoft 365 Copilot and Microsoft Security Copilot, it is crucial to add them as service principals. This is because these apps are not listed in the Conditional Access application picker. Selecting other apps like Office 365 would unintentionally restrict access to the entire suite, rather than just the Copilot services.

You can create the service principals for Microsoft 365 Copilot and Microsoft Security Copilot in either of the following ways:

- Create service principals for Copilot apps in Microsoft 365 using PowerShell

- Configure service principals for Copilot apps using Graph Explorer

Create Service Principals for Copilot Apps in Microsoft 365 Using PowerShell

To create separate service principals for the Copilot services using PowerShell, follow the steps below:

- Connect to Microsoft Graph PowerShell with the necessary permissions.

  ```powershell
  Connect-MgGraph -Scopes "Application.ReadWrite.All"
  ```

- Use the following cmdlets to create service principals for each generative AI service. Here, the App IDs correspond to Microsoft’s published identifiers for the Copilot services.

  ```powershell
  # Create service principal for Microsoft 365 Copilot
  New-MgServicePrincipal -AppId "fb8d773d-7ef8-4ec0-a117-179f88add510"
  # Create service principal for Microsoft Security Copilot
  New-MgServicePrincipal -AppId "bb5ffd56-39eb-458c-a53a-775ba21277da"
  ```

- Once the commands run successfully, verify that both service principals have been created by running the Get-MgServicePrincipal cmdlet.

  ```powershell
  Get-MgServicePrincipal -All | Where-Object { $_.AppId -in @("fb8d773d-7ef8-4ec0-a117-179f88add510", "bb5ffd56-39eb-458c-a53a-775ba21277da") }
  ```
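Running New-MgServicePrincipal a second time for an App ID that already exists in the tenant typically fails, so it can help to check first. The following is a minimal sketch of that check, not an official procedure. The helper names `Get-AppIdFilter` and `Add-CopilotServicePrincipal` are hypothetical, and the sketch assumes you have already connected with Connect-MgGraph as shown above.

```powershell
# App IDs from the steps above, keyed by a label used only for console output.
$copilotApps = [ordered]@{
    'Microsoft 365 Copilot'      = 'fb8d773d-7ef8-4ec0-a117-179f88add510'
    'Microsoft Security Copilot' = 'bb5ffd56-39eb-458c-a53a-775ba21277da'
}

# Hypothetical helper: builds the OData filter for a server-side lookup by AppId.
function Get-AppIdFilter {
    param([Parameter(Mandatory)][string]$AppId)
    "appId eq '$AppId'"
}

# Hypothetical helper: creates each service principal only if it is missing.
# Assumes Connect-MgGraph has already been run with Application.ReadWrite.All.
function Add-CopilotServicePrincipal {
    param([Parameter(Mandatory)][System.Collections.IDictionary]$Apps)
    foreach ($name in $Apps.Keys) {
        $appId = $Apps[$name]
        $existing = Get-MgServicePrincipal -Filter (Get-AppIdFilter -AppId $appId)
        if ($existing) {
            Write-Host "$name already has a service principal ($($existing.Id))."
        }
        else {
            $created = New-MgServicePrincipal -AppId $appId
            Write-Host "Created service principal for $name ($($created.Id))."
        }
    }
}

# Usage (after Connect-MgGraph):
# Add-CopilotServicePrincipal -Apps $copilotApps
```

Filtering with `-Filter` lets Microsoft Graph do the lookup, which avoids downloading every service principal in a large tenant just to find two of them.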

Configure Service Principals for Copilot Apps Using Graph Explorer

To set up service principals for Microsoft 365 Copilot and Microsoft Security Copilot using Graph Explorer, follow the steps below.

- Sign in to the Microsoft Graph Explorer.

- Change the request type to POST and use the following endpoint.

  ```
  https://graph.microsoft.com/v1.0/servicePrincipals
  ```

- In the request body, provide the JSON details for each service, using the published App IDs.

  - For Microsoft 365 Copilot:

    ```json
    {
      "appId": "fb8d773d-7ef8-4ec0-a117-179f88add510",
      "displayName": "Microsoft 365 Copilot"
    }
    ```

  - For Microsoft Security Copilot:

    ```json
    {
      "appId": "bb5ffd56-39eb-458c-a53a-775ba21277da",
      "displayName": "Microsoft Security Copilot"
    }
    ```

- Click Run query to run the request. If successful, Graph Explorer will return the details of the newly created service principals.

- To verify that the service principals were created correctly, you can use a GET request with a filter on the display name, such as:

  ```
  https://graph.microsoft.com/v1.0/servicePrincipals?$filter=displayName eq 'Microsoft 365 Copilot'
  ```

  Similarly, repeat the query for ‘Microsoft Security Copilot’.
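A display-name filter only matches if the name stored in your tenant is exactly the one you query for. As an alternative, you could filter on the App ID, which does not depend on naming. The sketch below builds such a URL. The helper name `Get-ServicePrincipalLookupUri` is hypothetical and exists only for illustration.

```powershell
# Hypothetical helper: builds a Graph v1.0 URL that looks up a service principal by AppId.
function Get-ServicePrincipalLookupUri {
    param([Parameter(Mandatory)][string]$AppId)
    # URL-encode the filter expression so spaces and quotes survive copy and paste.
    $filter = [uri]::EscapeDataString("appId eq '$AppId'")
    # Single quotes keep $filter in the URL literal instead of expanding a variable.
    'https://graph.microsoft.com/v1.0/servicePrincipals?$filter=' + $filter
}

# Print one lookup URL per Copilot service.
Get-ServicePrincipalLookupUri -AppId 'fb8d773d-7ef8-4ec0-a117-179f88add510'
Get-ServicePrincipalLookupUri -AppId 'bb5ffd56-39eb-458c-a53a-775ba21277da'
```

You can paste the resulting URLs into Graph Explorer as GET requests, or pass them to `Invoke-MgGraphRequest -Method GET -Uri <url>` from a connected PowerShell session.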

With both service principals in place, you’re now ready to define how and when users can access these AI services.

2. Create Conditional Access Policy for Microsoft 365 Copilot Services

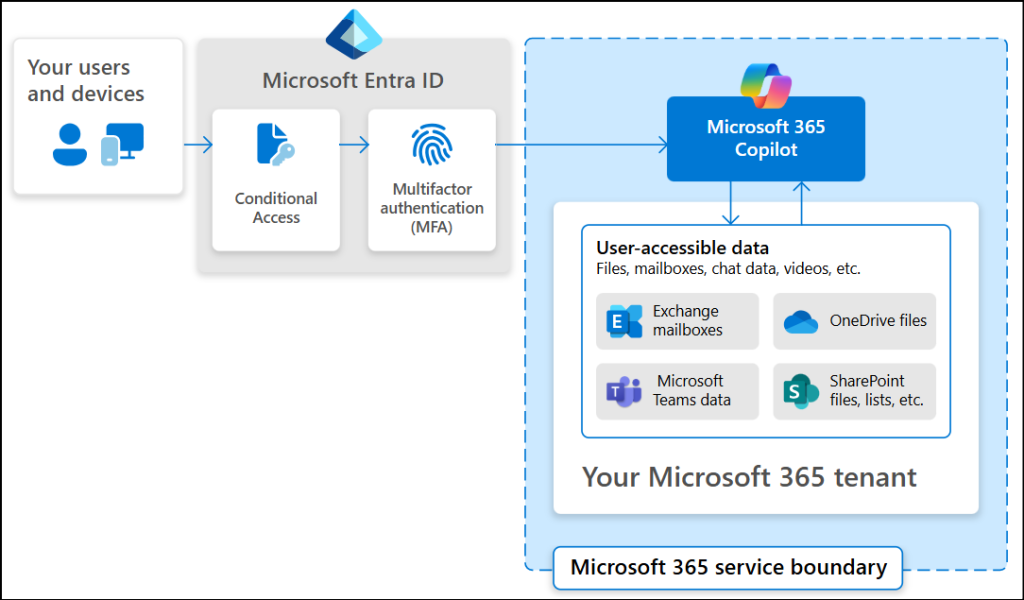

To strike the right balance between productivity and protection, you can now configure Conditional Access policies to protect Generative AI apps that align with your organization’s security posture. Below is an image that depicts how a Conditional Access policy protects access to Generative AI services.

Here are three examples you can choose from based on your organization’s needs.

- Require phishing-resistant multifactor authentication for Gen AI services

- Restrict Gen AI services access to compliant devices at moderate level risk

- Configure Terms of Use acceptance for Microsoft 365 Copilot Services

Require Phishing-Resistant Multifactor Authentication for Gen AI Services

Some employees may still rely on weaker sign-in methods, such as email or phone-based verification, to access Copilot, which poses a serious security risk. To protect every user session, it is important to enforce phishing-resistant multifactor authentication (MFA) for all users of Microsoft 365 Copilot and Microsoft Security Copilot. Follow the steps below to set this up.

- Sign in to the Microsoft Entra admin center at least as a Conditional Access Administrator.

- Navigate to Entra ID → Conditional Access → Policies and select +New Policy.

- Provide a descriptive name for the policy. Here, it is named “Require MFA for Gen AI Apps Access”.

- Now, under Assignments → Users, include All users and exclude specific accounts like break-glass accounts to ensure continued access. You should also exclude specific service accounts and service principals so that automated background processes continue to function without interruption.

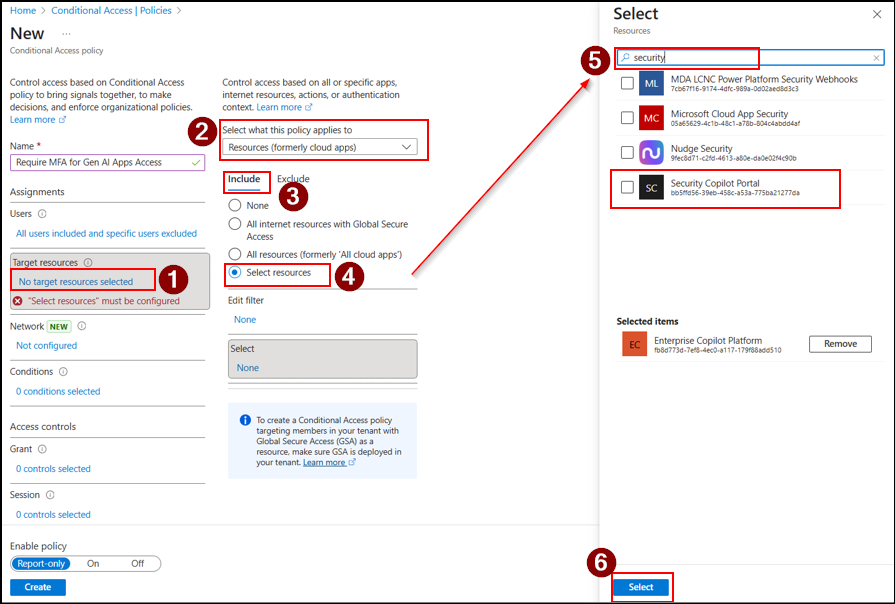

- Under Target resources, choose Resources (formerly cloud apps) from the Select what this policy applies to dropdown.

- Next, go to Include → Select Resources, and click Select. Choose Enterprise Copilot Platform and Security Copilot Portal, then click Select to confirm.

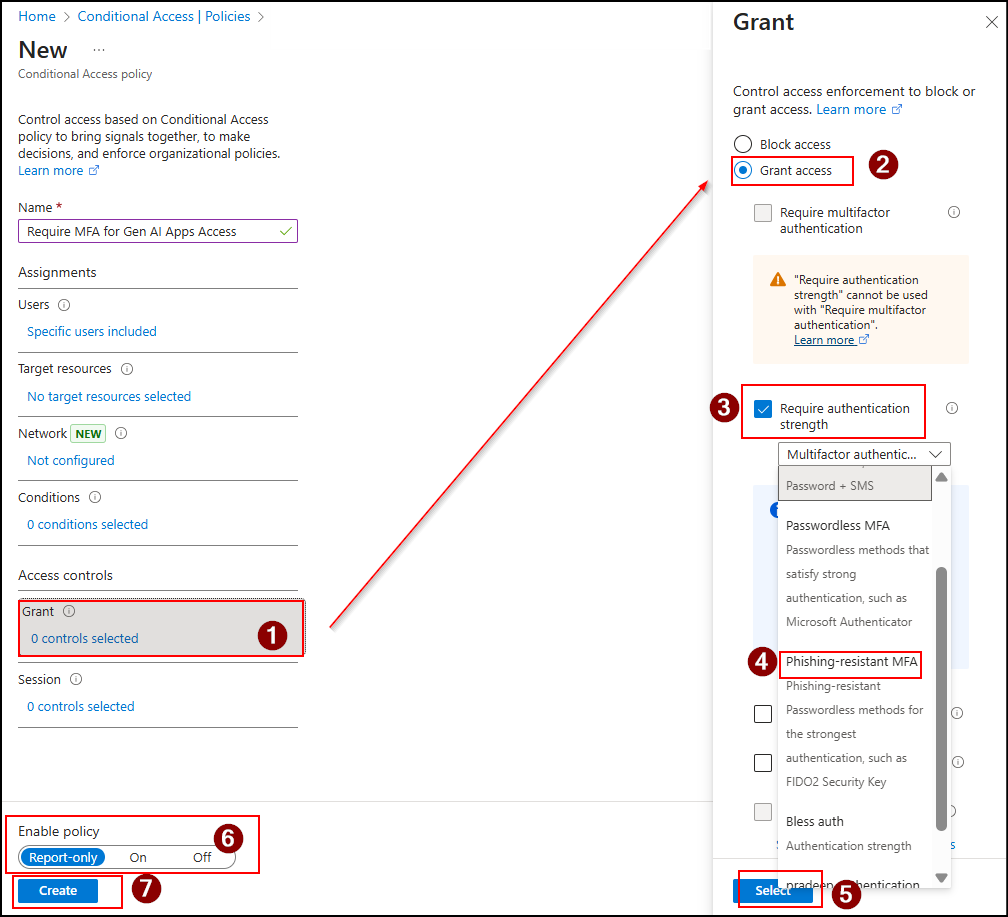

- Under Access controls → Grant, select Grant access, and check the Require authentication strength option.

- Next, select Phishing-resistant MFA from the list and click Select.

- Review your settings, set Enable policy to Report-only and select Create.

- After validating the impact in report-only mode, switch the Enable policy toggle to On.
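If you prefer to script this policy, the portal steps above map roughly onto a Microsoft Graph request body. The following is a sketch under stated assumptions rather than a definitive implementation. The helper name `Get-GenAiMfaPolicyBody` is hypothetical, the excluded object ID is a placeholder, and the authentication strength ID shown is what I understand to be the built-in Phishing-resistant MFA strength. Confirm it in your tenant (for example with Get-MgPolicyAuthenticationStrengthPolicy) before relying on it.

```powershell
# Hypothetical helper: returns the request body for a report-only policy that
# requires phishing-resistant MFA on the two Copilot apps.
function Get-GenAiMfaPolicyBody {
    param(
        # Object IDs of break-glass accounts to exclude (placeholders, supply your own).
        [string[]]$ExcludedUserIds = @()
    )
    @{
        displayName   = 'Require MFA for Gen AI Apps Access'
        state         = 'enabledForReportingButNotEnforced'   # Report-only
        conditions    = @{
            clientAppTypes = @('all')
            users          = @{
                includeUsers = @('All')
                excludeUsers = $ExcludedUserIds
            }
            applications   = @{
                includeApplications = @(
                    'fb8d773d-7ef8-4ec0-a117-179f88add510',  # Microsoft 365 Copilot
                    'bb5ffd56-39eb-458c-a53a-775ba21277da'   # Microsoft Security Copilot
                )
            }
        }
        grantControls = @{
            operator               = 'OR'
            # Assumed ID of the built-in Phishing-resistant MFA strength; verify in your tenant.
            authenticationStrength = @{ id = '00000000-0000-0000-0000-000000000004' }
        }
    }
}

# Preview the JSON that would be sent to Microsoft Graph.
$body = Get-GenAiMfaPolicyBody -ExcludedUserIds @('<break-glass-object-id>')
$body | ConvertTo-Json -Depth 6

# To create the policy (assumes a session with Policy.ReadWrite.ConditionalAccess):
# New-MgIdentityConditionalAccessPolicy -BodyParameter $body
```

Keeping `state` at `enabledForReportingButNotEnforced` mirrors the Report-only setting in the portal, so the scripted policy can be validated before it is switched to `enabled`.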

Tip: Organizations can also layer Conditional Access with Just-in-Time access to GenAI tools using web content filtering and Entra access packages. This approach grants temporary, approved access only when required, minimizing risks of continuous exposure.

How Does the Policy Impact Users?

Once the above Conditional Access policy is applied, user access behaviour changes based on whether they are included or excluded from the policy.

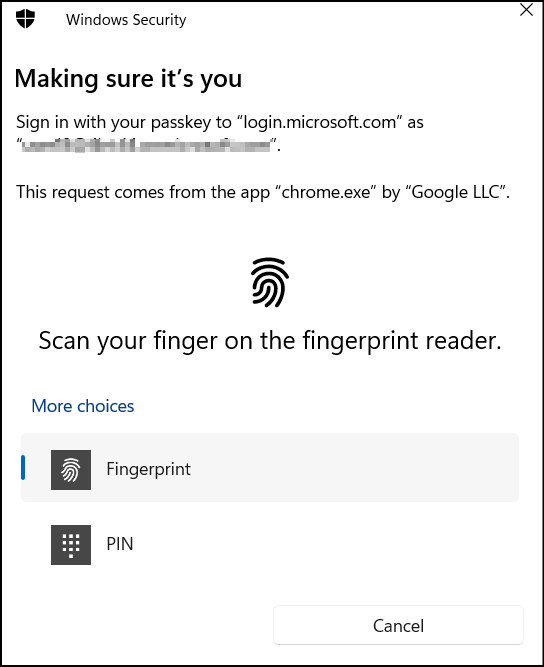

When users included in the policy try to access Microsoft 365 Copilot or Security Copilot, they’ll be prompted to sign in with a phishing-resistant method such as a passkey or Windows Hello for Business. Once they complete the prompt successfully, they will be allowed to sign in.

Restrict Users with Insider Risk from Accessing Generative AI on Non-Compliant Devices

Employees flagged for insider risk may access AI-powered tools like Microsoft 365 Copilot or Security Copilot from non-compliant devices. This will increase the risk of security violations and potential data leakage. For example, an employee under investigation for data misuse could use Microsoft 365 Copilot on an unmanaged personal laptop to summarize confidential project files or extract sensitive client information.

If your organization manages devices through Microsoft Intune, you can enforce a Conditional Access policy that requires users at the selected insider risk levels to sign in from a compliant device before they can access Copilot. This keeps risky users off unmanaged devices and reduces the chance of data exposure.

To achieve this, first configure Adaptive Protection in Microsoft Purview and then create a device compliance policy in Microsoft Intune. Once these steps are complete, you can configure a device-based Conditional Access policy to restrict Copilot access to trusted devices only. Follow these steps to implement it.

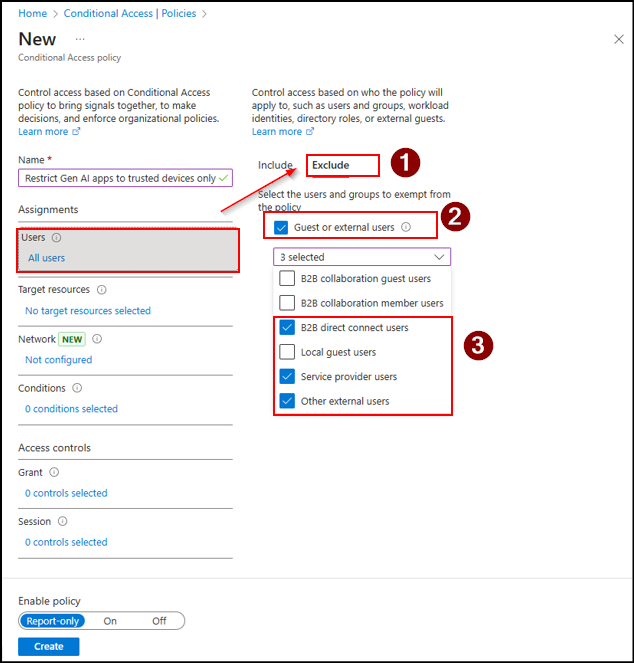

- Go to the Policies tab in the Conditional Access page and click +New policy to create a policy.

- Give your policy a descriptive name. For example, “Restrict Gen AI apps to trusted devices only”.

- In the Assignments → Users section:

  - Under Include, select All users.

  - Under Exclude, select your organization’s break-glass accounts that should remain exempt from the policy, and click Select.

  - Then, still under Exclude, check the Guest or external users box and select B2B direct connect, Service provider users, and Other external users. This ensures that these external accounts aren’t restricted by the policy, allowing trusted partners to maintain uninterrupted access for collaboration.

- Under Target resources, select Resources (formerly cloud apps). Then go to Include → Select resources → Select and choose Enterprise Copilot Platform and Security Copilot.

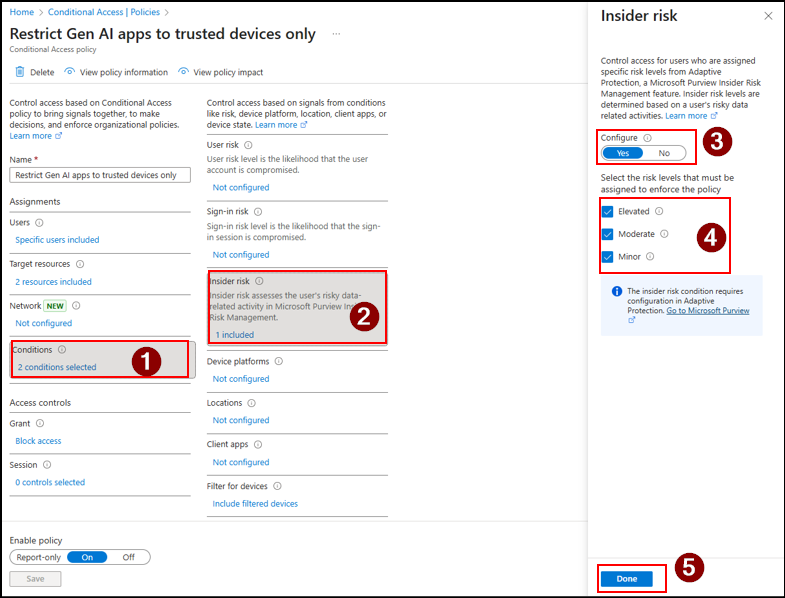

- Now comes the most important part. Under Conditions → Insider risk, set Configure to Yes. Then, select the risk levels that must be assigned to enforce the policy based on your needs and click Done.

- Under Access controls → Grant → Grant Access, select Require device to be marked as compliant and click Select.

- Now, confirm all your settings and set the Enable policy toggle to Report-only.

- After validating the impact in report-only mode, switch the Enable policy toggle to On.
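The same policy can be sketched as a Microsoft Graph request body. Treat this as an illustration under assumptions. The helper name `Get-GenAiInsiderRiskPolicyBody` is hypothetical, the default risk level of `moderate` is only an example choice, and the `insiderRiskLevels` property and its values (`minor`, `moderate`, `elevated`) reflect my understanding of the Conditional Access schema, so check the current Graph documentation before using it.

```powershell
# Hypothetical helper: returns the request body for a report-only policy that
# requires a compliant device for users at the chosen insider risk level.
function Get-GenAiInsiderRiskPolicyBody {
    param(
        # Object IDs of break-glass accounts to exclude (placeholders, supply your own).
        [string[]]$ExcludedUserIds = @(),
        # Assumed values: 'minor', 'moderate', 'elevated'. Pick what fits your needs.
        [string]$InsiderRiskLevels = 'moderate'
    )
    @{
        displayName   = 'Restrict Gen AI apps to trusted devices only'
        state         = 'enabledForReportingButNotEnforced'   # Report-only
        conditions    = @{
            clientAppTypes    = @('all')
            insiderRiskLevels = $InsiderRiskLevels
            users             = @{
                includeUsers                 = @('All')
                excludeUsers                 = $ExcludedUserIds
                # Mirrors the Guest or external users exclusion from the portal steps.
                excludeGuestsOrExternalUsers = @{
                    guestOrExternalUserTypes = 'b2bDirectConnectUser,serviceProvider,otherExternalUser'
                    externalTenants          = @{
                        '@odata.type'  = '#microsoft.graph.conditionalAccessAllExternalTenants'
                        membershipKind = 'all'
                    }
                }
            }
            applications      = @{
                includeApplications = @(
                    'fb8d773d-7ef8-4ec0-a117-179f88add510',  # Microsoft 365 Copilot
                    'bb5ffd56-39eb-458c-a53a-775ba21277da'   # Microsoft Security Copilot
                )
            }
        }
        grantControls = @{
            operator        = 'OR'
            builtInControls = @('compliantDevice')
        }
    }
}

# Preview the JSON that would be sent to Microsoft Graph.
$body = Get-GenAiInsiderRiskPolicyBody -ExcludedUserIds @('<break-glass-object-id>')
$body | ConvertTo-Json -Depth 8

# To create the policy (assumes a session with Policy.ReadWrite.ConditionalAccess):
# New-MgIdentityConditionalAccessPolicy -BodyParameter $body
```

The insider risk condition only has an effect once Adaptive Protection in Microsoft Purview is assigning risk levels to users, as described earlier in this section.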

How Does the Policy Work?

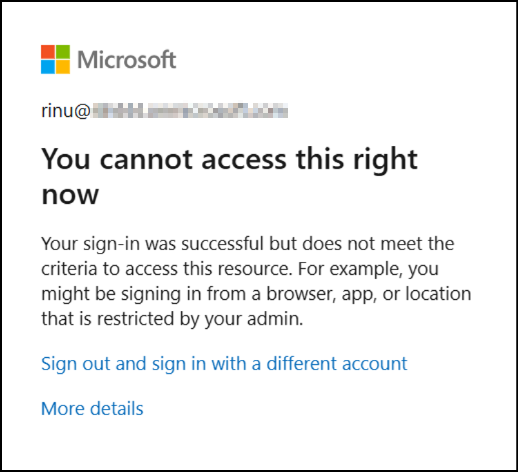

Once the policy is applied, users marked as insider risk who try to access Microsoft 365 Copilot or Microsoft Security Copilot from a non-compliant device will be prompted with the error message:

“You cannot access this right now. Your sign in was successful but does not meet the criteria to access this resource. For example, you might be signing in from a browser, app, or location that is restricted by your admin.”

Configure Terms of Use Acceptance for Microsoft 365 Copilot Services

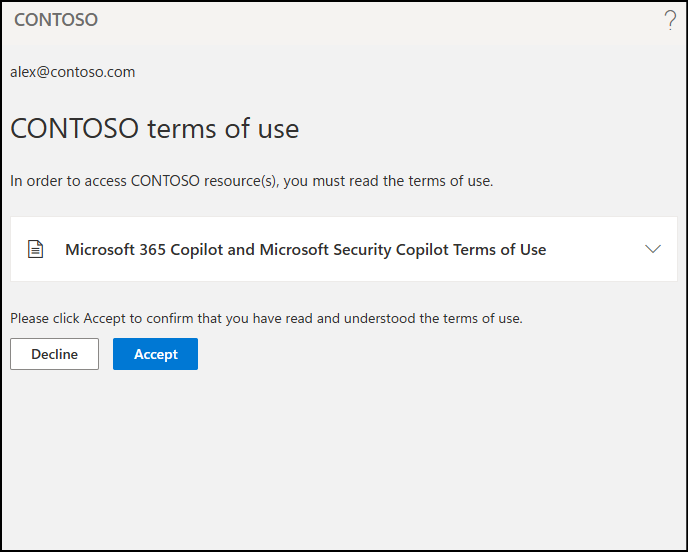

Employees often start using Microsoft 365 Copilot or Security Copilot without fully understanding their organization’s data handling, privacy, or responsible AI usage policies. This lack of awareness can lead to unintentional data sharing, misuse of AI-generated content, or compliance violations.

To address this, first set up Microsoft Entra terms of use with Conditional Access. Then, enforce a Conditional Access policy as described below to grant access only after users agree to the terms. This ensures that users review and acknowledge your organization’s AI usage guidelines before accessing Copilot services.

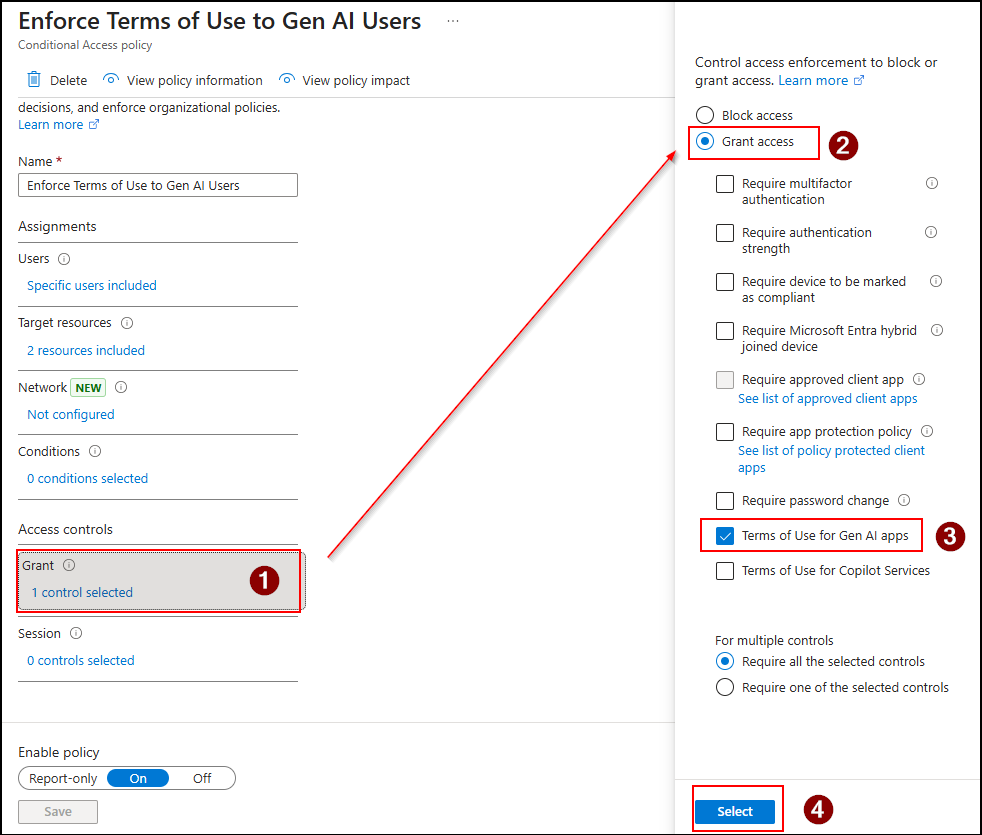

- Go to the Policies tab in the Conditional Access page and click +New policy.

- Provide a descriptive name for your policy. Here, it is named ‘Enforce Terms of Use to Gen AI Users’.

- Under Assignments → Users, include All users.

- Then under Target resources, select Resources (formerly cloud apps). Then go to Include → Select resources → Select and choose Enterprise Copilot Platform and Security Copilot.

- Now, under Access Controls → Grant, select Grant Access and choose the terms of use policy you created in the previous step. Next, click Select.

- Now, confirm all your settings and set the Enable policy toggle to Report-only.

- After validating the impact in report-only mode, switch the Enable policy toggle to On.
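For completeness, here is a sketch of how the terms of use policy might look as a Microsoft Graph request body. The helper name `Get-GenAiTermsOfUsePolicyBody` is hypothetical, and the agreement ID is a placeholder for the ID of the terms of use you created beforehand. I believe Get-MgAgreement can list those IDs, but verify that in your environment.

```powershell
# Hypothetical helper: returns the request body for a report-only policy that
# grants access to the Copilot apps only after the terms of use are accepted.
function Get-GenAiTermsOfUsePolicyBody {
    param(
        # ID of an existing Microsoft Entra terms of use agreement (placeholder in the usage below).
        [Parameter(Mandatory)][string]$AgreementId
    )
    @{
        displayName   = 'Enforce Terms of Use to Gen AI Users'
        state         = 'enabledForReportingButNotEnforced'   # Report-only
        conditions    = @{
            clientAppTypes = @('all')
            users          = @{ includeUsers = @('All') }
            applications   = @{
                includeApplications = @(
                    'fb8d773d-7ef8-4ec0-a117-179f88add510',  # Microsoft 365 Copilot
                    'bb5ffd56-39eb-458c-a53a-775ba21277da'   # Microsoft Security Copilot
                )
            }
        }
        grantControls = @{
            operator   = 'OR'
            termsOfUse = @($AgreementId)
        }
    }
}

# Preview the JSON that would be sent to Microsoft Graph.
$body = Get-GenAiTermsOfUsePolicyBody -AgreementId '<terms-of-use-agreement-id>'
$body | ConvertTo-Json -Depth 6

# To create the policy (assumes a session with Policy.ReadWrite.ConditionalAccess):
# New-MgIdentityConditionalAccessPolicy -BodyParameter $body
```

As in the portal steps, this sketch includes all users. Consider whether break-glass accounts should be excluded here as well, as in the earlier policies.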

What’s the Impact of the Policy?

Once the policy is applied, users will not be allowed to use Copilot services until they read and accept the Terms of Use. When they sign in, a prompt will appear stating:

“You will need to authenticate to access this resource. Select ‘Continue’ to authenticate your account.”

After clicking Continue, they’ll be redirected to a page displaying the terms of use. Once they click Accept, they will be granted access to Copilot.

Wrapping Up

The Conditional Access policies outlined above are just examples of how you can restrict access to generative AI enterprise services in Microsoft 365. Tailoring these policies to meet your organization’s specific needs will help shape your security posture exactly the way you require. You can also leverage Conditional Access policies with web content filtering in Microsoft Entra to block GenAI tools within your tenant. These policies ensure protection against unauthorized internet access.

We’re only on the 9th day of Cybersecurity Awareness Month, and there’s still a long way to go! Stay tuned for more insights in our upcoming cybersecurity blogs, and feel free to share your comments or questions below.