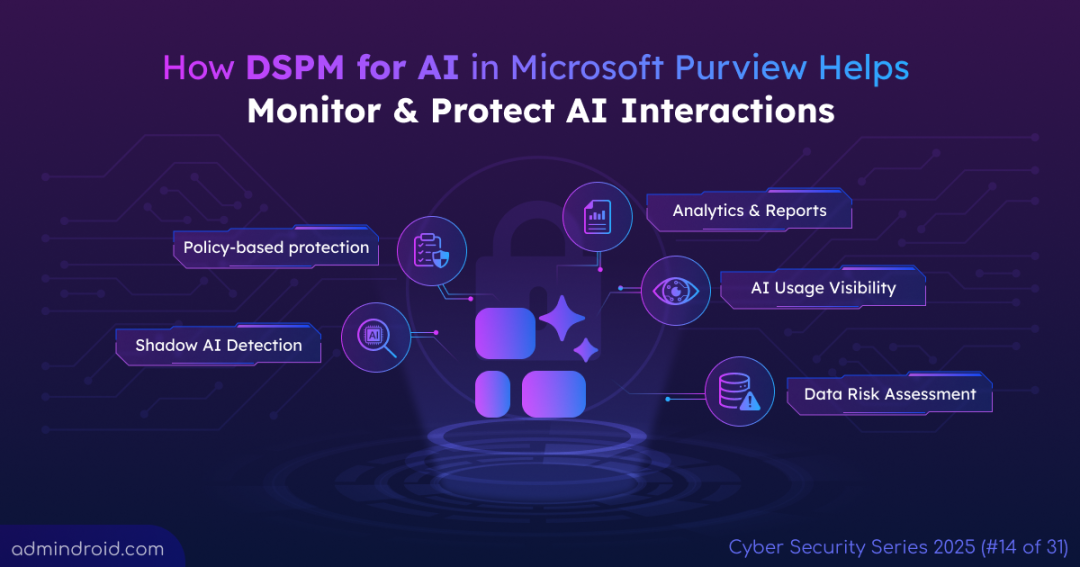

On Day 14 of Cybersecurity Awareness Month, let’s discuss how DSPM for AI helps organizations gain visibility into AI interactions and protect sensitive information. Keep following along for more blogs in the Cybersecurity blog series.

Generative AI tools like ChatGPT, Microsoft 365 Copilot, and Google Gemini are transforming the way we work by streamlining tasks, automating processes, and generating insights faster than ever. However, this growing reliance on AI also brings new security challenges. One often-overlooked risk is Shadow AI: employees using AI applications outside IT oversight. Without visibility into these interactions, organizations may not know what sensitive information AI tools are processing or when employees are oversharing confidential data. To address these risks, organizations need a way to monitor AI activity and apply data protection policies to it.

In this blog, we will explore what Data Security Posture Management for AI (DSPM for AI) is and how it can help monitor and manage AI activity in your organization.

What is DSPM for AI in Microsoft Purview?

DSPM for AI is essentially DSPM in Microsoft Purview with a GenAI-aware approach, designed to help organizations safely adopt AI. It allows you to view AI interactions, investigate activities, and implement policies to protect and govern sensitive data – all from a single, centralized platform.

License and Role Requirements for DSPM for AI

To use DSPM for AI, your organization must have either a Microsoft 365 E5 or a Microsoft 365 E5 Compliance subscription.

Roles and role groups with full access (view, create, and edit) in DSPM for AI:

- Microsoft Entra ID Compliance Administrator

- Microsoft Entra ID Global Administrator

- Microsoft Purview Compliance Administrator role group

Roles and role groups with view-only access in DSPM for AI:

- Microsoft Purview Security Reader role group

- Purview Data Security AI Viewer

- AI Administrator

- Purview Data Security AI Content Viewer (for AI interactions only)

- Purview Data Security Content Explorer Content Viewer (for AI interactions and file details during data risk assessments)
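If you track role assignments in your own scripts, the two access tiers above can be captured in a small lookup. The following is a minimal Python sketch under stated assumptions: it does not call Microsoft Purview or Microsoft Entra ID, the `access_level` helper is hypothetical, and the role names are simply copied from the lists above.

```python
# Illustrative lookup only; nothing here queries Microsoft Purview or Entra ID.
# Role and role group names are copied from the lists above.
FULL_ACCESS = {
    "Microsoft Entra ID Compliance Administrator",
    "Microsoft Entra ID Global Administrator",
    "Microsoft Purview Compliance Administrator",  # role group
}
VIEW_ONLY = {
    "Microsoft Purview Security Reader",  # role group
    "Purview Data Security AI Viewer",
    "AI Administrator",
    "Purview Data Security AI Content Viewer",
    "Purview Data Security Content Explorer Content Viewer",
}


def access_level(assigned_roles):
    """Return 'full', 'view-only', or 'none' for a user's assigned roles."""
    roles = set(assigned_roles)
    if roles & FULL_ACCESS:
        return "full"
    if roles & VIEW_ONLY:
        return "view-only"
    return "none"


print(access_level(["AI Administrator"]))
print(access_level(["AI Administrator", "Microsoft Entra ID Global Administrator"]))
```

A user who holds roles from both lists is reported as having full access, since the broader permission applies.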

Benefits of DSPM for AI:

Key benefits of using DSPM for AI include:

- Gain complete visibility into how employees use AI applications across your organization.

- Monitor and manage all AI apps and agents from a single, unified dashboard in Microsoft Purview.

- Detect and prevent sensitive information from being accidentally shared through AI interactions.

- Implement one-click policies to automatically detect risky AI behavior and enforce data protection rules.

- Identify overshared content in SharePoint sites and detect files with overly broad permissions before data exposure occurs.

- Access audit-like logs showing prompts, responses, and sensitive information for thorough investigation of security incidents.

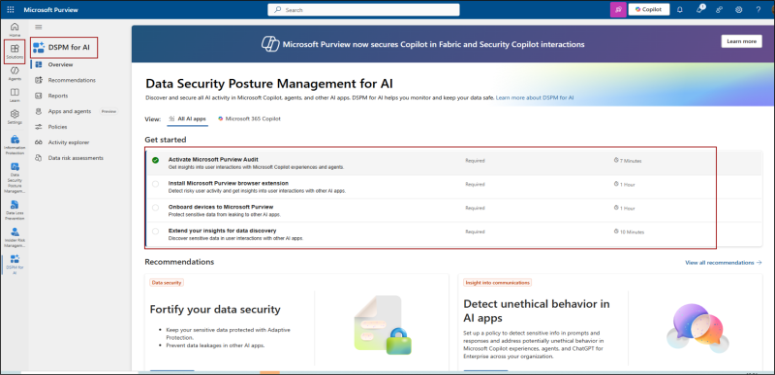

Configure DSPM for AI in Microsoft Purview

Follow these steps to access DSPM for AI:

- Open the Microsoft Purview portal.

- Go to Solutions and select DSPM for AI.

- On the Overview page, navigate to the All AI apps tab.

To begin with, enable all the available settings under the Get Started section.

1. Activate Microsoft Purview Audit: Turning on Microsoft Purview Audit gives you visibility into user activities within Microsoft Copilot and other AI agents. For most tenants, auditing is enabled by default. If it isn’t, you can turn on auditing from the Microsoft Purview portal.

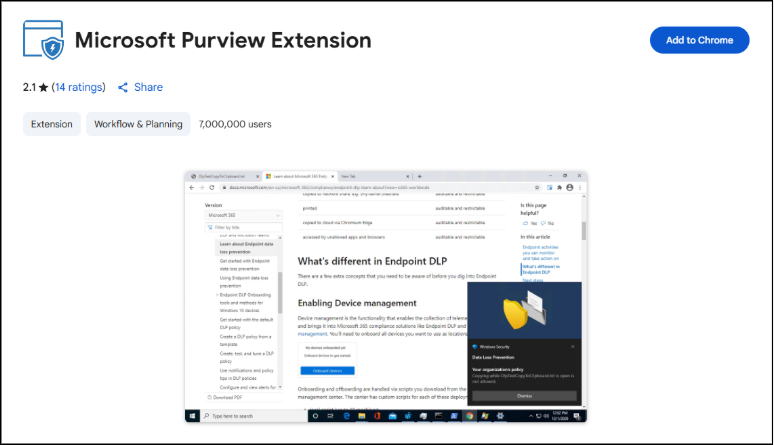

2. Install Microsoft Purview browser extension: The Microsoft Purview Compliance Extension (available for Edge, Chrome, and Firefox) collects signals that help detect when users access or share sensitive data on websites that DSPM for AI supports. Install the extension on the browsers your organization uses to monitor such interactions effectively.

3. Onboard Devices to Microsoft Purview: Onboarding user devices to Microsoft Purview enables monitoring of user activity and enforcement of data protection policies while users interact with AI applications.

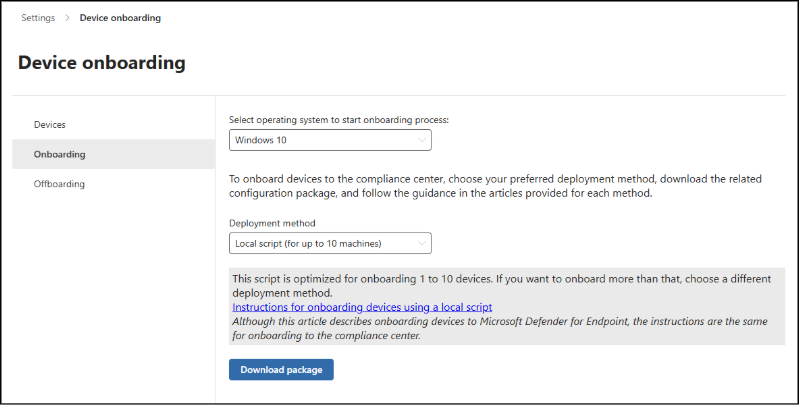

To onboard devices, navigate to Microsoft Purview portal -> Settings -> Device onboarding.

- If your devices are already onboarded to Microsoft Defender for Endpoint, they will appear under: Microsoft Purview portal -> Settings -> Device onboarding -> Devices.

- For devices that haven’t been onboarded yet, ensure device onboarding is enabled before starting the onboarding process.

- Select your operating system, choose a deployment method, and download the corresponding onboarding package or script.

- Deploy the package on your target devices to complete onboarding.

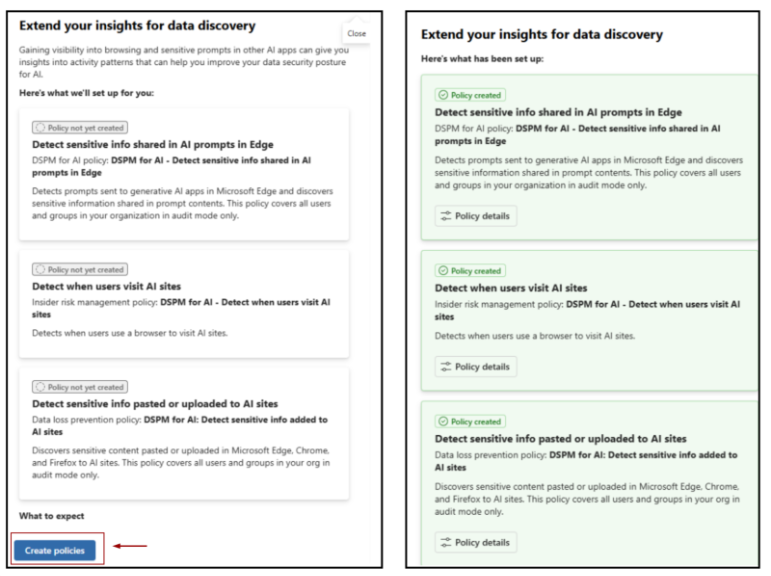

4. Extend Insights for Data Discovery: DSPM for AI provides one-click policies that help detect and prevent sensitive data exposure when users interact with AI tools or websites. To implement these policies, simply click “Create Policies” and apply them immediately.

How to Use DSPM for AI to Monitor AI Interactions in Microsoft 365

Once the above prerequisites are completed, you can start leveraging the powerful features that Microsoft DSPM for AI provides to secure your organization’s AI interactions. The platform offers several key capabilities to help you maintain visibility and control over sensitive data, including:

- Policy recommendations for AI security

- Find AI interaction reports and usage analytics

- Monitor AI applications and agent activity

- Detailed AI activity tracking and prompt analysis

- Data risk assessments for Copilot

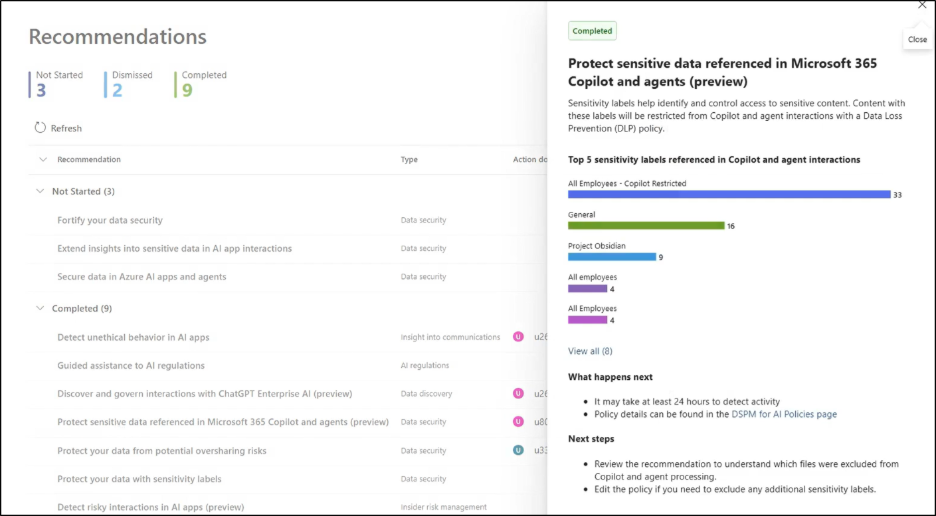

Policy Recommendations for AI Security:

The Recommendations page lists specific recommendations for different AI usage scenarios, such as data sharing, prompt inputs, and output handling. For each scenario, DSPM for AI suggests policies to help safeguard sensitive data during AI interactions. Some of the recommendations include:

- Running data risk assessments across SharePoint sites.

- Applying sensitivity labels and policies.

- Detecting risky interactions in AI apps, such as prompts containing sensitive data.

- Securing interactions for Microsoft Copilot experiences.

- Creating default policies that immediately help detect and prevent sensitive data from being shared with generative AI platforms.

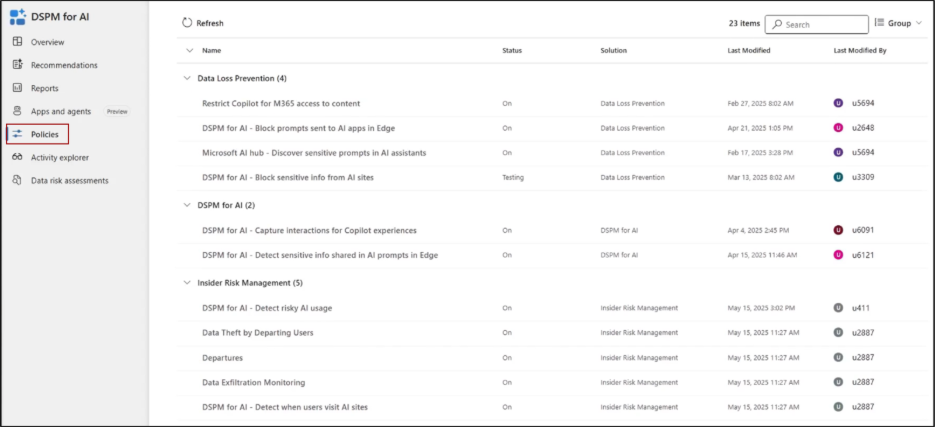

Once you apply the recommended policies, you can visit the Policies tab to view and manage them. Here, you can edit default one-click policies, AI-related policies, and all other policies created in your organization as part of recommendation remediation.

Note: On this page, you will also find additional policies that are not listed on the Recommendations page, as DSPM identifies them as relevant for managing AI applications.

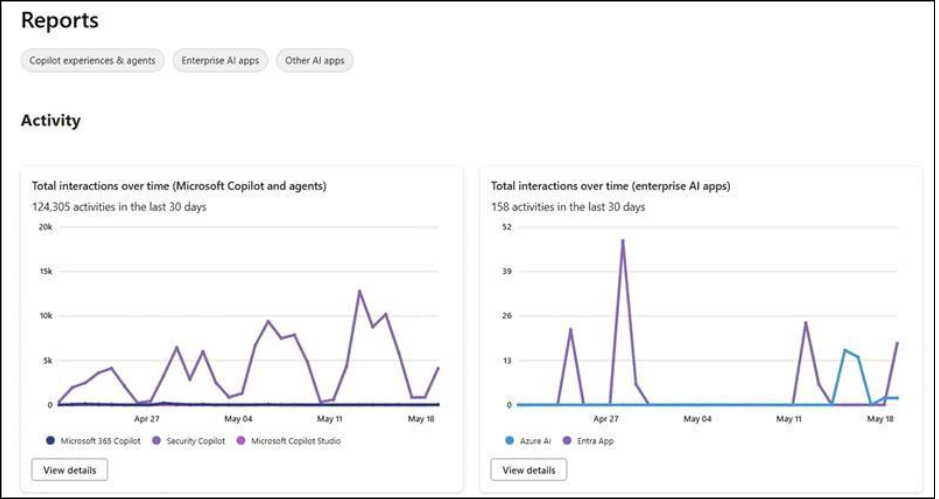

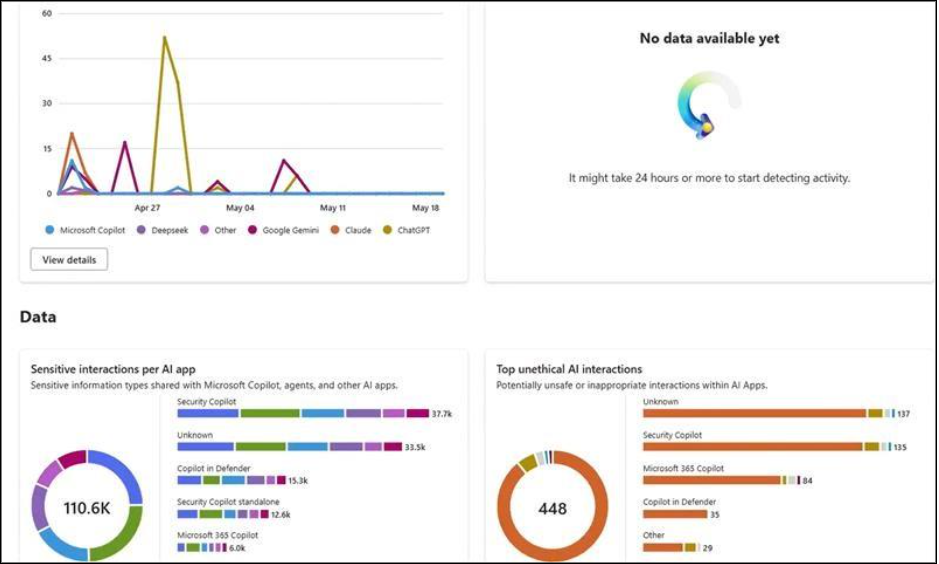

Find AI Interaction Reports and Usage Analytics:

Use the Reports section in the navigation pane to view the results of the default policies you created. This page provides insights into AI apps and agents, helping you understand how AI is being used and spot any potentially risky activities. Some of the available reports include:

- Total visits on AI apps

- Sensitive interactions per AI app

- Top unethical AI interactions

- Sensitive interactions by department (preview)

- Top sensitivity labels referenced in Microsoft 365 Copilot and agents

- Insider risk severity

- Insider risk severity per AI app

- Potential risky AI usage

You can filter the data by Copilot experiences and agents, Enterprise AI apps, or Other AI apps to drill into specific GenAI applications. These insights help you identify and block risky AI apps across your Microsoft 365 organization.

Note: Allow at least one day for the reports to populate.

You can also configure communication compliance policies in Microsoft Purview to find AI interactions.

Monitor AI Applications and Agent Activity:

This section displays all AI applications and agents within your organization. You can explore usage patterns, check which policies are applied, and view detailed user activity, response trends, and prompt trends.

Selecting a specific agent provides detailed insights, including:

- Alerts

- Instances of inappropriate behavior

- Associated data labels

For each agent, you can also review what sensitive information was accessed and how it is safeguarded through Microsoft Purview policies.

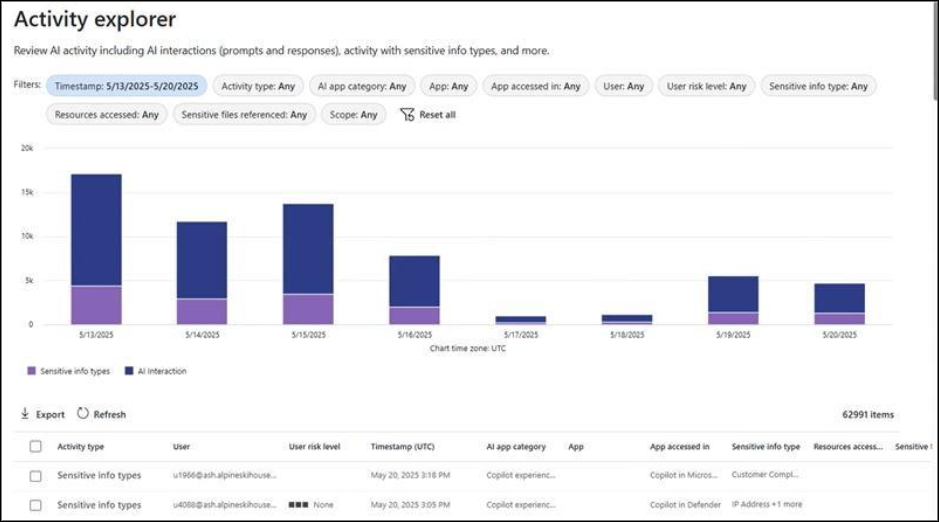

Detailed AI Activity Tracking and Prompt Analysis:

The Activity Explorer lets you examine detailed interactions with different AI apps and agents. Think of it like an audit log – you can see the actual prompts and responses, as well as any sensitive information involved in those interactions.

The details include:

- Type of activity and the user who performed it

- Date and time of the activity

- AI app category and specific app

- Location or app accessed

- Types of sensitive information involved

- Files referenced and sensitive files accessed

Examples of activities include AI interactions, sensitive information usage, and visits to AI websites. These insights help you detect unauthorized GenAI usage within your tenant. You can then block those GenAI apps using web content filtering to prevent further access or data exposure.

Note: Prompts and responses for AI interactions are visible when you have the appropriate permissions.

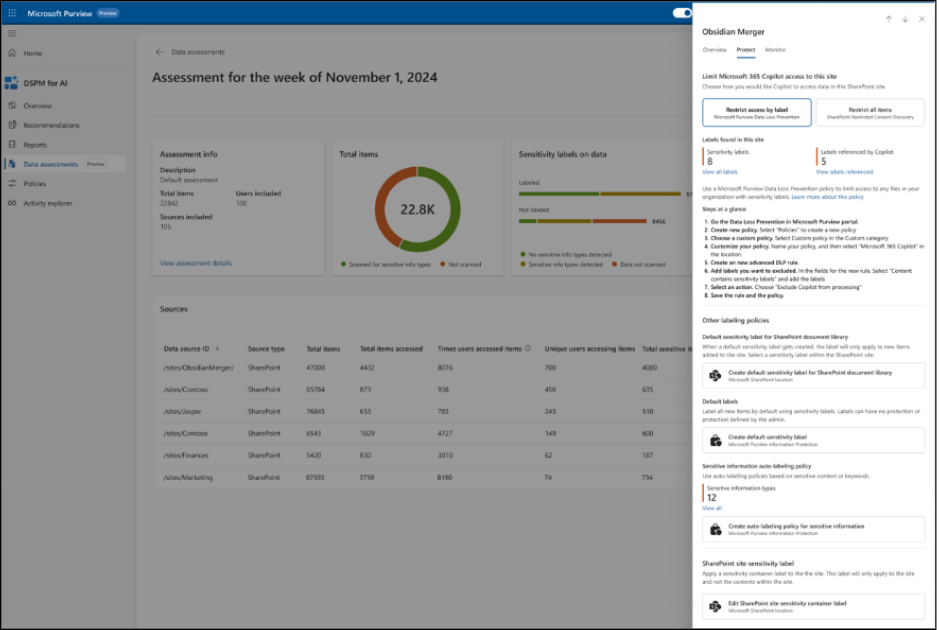
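To make the kind of triage described above more concrete, here is a minimal Python sketch that counts sensitive interactions per AI app. Everything in it is an assumption for illustration: the `AIActivity` record shape only mirrors the details listed above and is not the real Activity Explorer schema, and the sample records are made up.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class AIActivity:
    # Hypothetical record shape based on the details listed above;
    # this is NOT the actual Activity Explorer data format.
    activity_type: str
    user: str
    app_category: str
    app: str
    sensitive_info_types: list = field(default_factory=list)


def sensitive_activity_by_app(records):
    """Count activities per AI app that involved at least one sensitive info type."""
    return Counter(r.app for r in records if r.sensitive_info_types)


# Made-up sample records, for illustration only.
sample = [
    AIActivity("AI interaction", "adele@contoso.com",
               "Copilot experiences and agents", "Microsoft 365 Copilot",
               ["Credit Card Number"]),
    AIActivity("AI website visit", "alex@contoso.com",
               "Other AI apps", "ChatGPT"),
    AIActivity("AI interaction", "alex@contoso.com",
               "Other AI apps", "ChatGPT",
               ["U.S. Social Security Number (SSN)"]),
]

for app, count in sensitive_activity_by_app(sample).items():
    print(f"{app}: {count} sensitive interaction(s)")
```

The website visit without any sensitive information type is ignored, so each app in the sample ends up with a count of one.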

Data Risk Assessments for Copilot:

With Microsoft 365 Copilot being used for everyday tasks, there’s a risk of accidentally sharing sensitive information. Data risk assessments help identify overshared content by scanning files for sensitive data and detecting sites with overly broad user permissions. Users need the appropriate Microsoft 365 Copilot license to access these features.

These assessments provide a clear view of overshared data, potential risks, recommended actions, and detailed reports. By default, an assessment runs weekly on the top 100 SharePoint sites based on usage. These assessments highlight:

- Sensitive information detected

- Sensitivity labels applied to items

- How items are being shared

You can also create custom assessments to focus on specific users or sites. Results from a custom assessment appear after at least 48 hours and will not update until a new assessment is run.

When a default assessment is created for the first time, it takes approximately 4 days for results to appear. Both default and custom assessments offer an export option, allowing you to save data in Excel, CSV, JSON, or TSV formats.
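The wait times above are easy to mix up, so here is a small Python sketch that estimates when results should first appear. It is only date arithmetic based on the figures in this section; the `earliest_results` helper is hypothetical, and the delays are approximate lower bounds rather than guarantees.

```python
from datetime import datetime, timedelta

# Wait times taken from the text above; treat them as rough lower bounds.
RESULT_DELAY = {
    "default": timedelta(days=4),   # first default assessment: about 4 days
    "custom": timedelta(hours=48),  # custom assessment: at least 48 hours
}


def earliest_results(created_at, kind):
    """Return the earliest time results are likely to appear for an assessment."""
    return created_at + RESULT_DELAY[kind]


created = datetime(2025, 10, 14, 9, 0)
print(earliest_results(created, "custom"))   # 2025-10-16 09:00:00
print(earliest_results(created, "default"))  # 2025-10-18 09:00:00
```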

After selecting View details, you can click on a site to open a pane with four tabs: Overview, Identify, Protect, and Monitor.

- Identify: Use on-demand classification to rescan existing items with new classifiers or scan items that haven’t been scanned.

- Protect: Prevent Copilot from accessing specific files by blocking content with certain sensitivity labels or restricting all items using restricted content discovery. Ensure sensitivity labels are configured with auto-labeling policies.

- Monitor: Conduct access reviews for SharePoint sites, assign responsibilities to site owners, and maintain oversight of sensitive content.

We hope this blog helps you get started with DSPM for AI. Thanks for reading. If you have any questions, feel free to reach us through the comments section.